How to score OKRs

The art of writing clear, measurable key results.

At Coda, we use Objectives and Key Results (OKRs) to set goals and measure progress. It has proved to be a good way to achieve our biggest goals. Teams plan quarterly sprints, set goals for each sprint, and evaluate success at the end. But crafting clear, measurable key results can be tricky. At times it’s easy to ask: “Are we really measuring what matters?”

We initially developed this guide and its associated tables as a resource for teams within Coda. The goal is to show how we prioritize metrics based on their level of impact, and to increase clarity and focus for teams. We hope it helps you, too.

In many cases, key results already have metrics, but they are not always consistently structured. At Coda, we track key results in a company-wide table so anyone on any team can understand what others are working on and how they will know whether they were successful. Each key result has a metric column that captures what the key result seeks to move, and by how much.

We maintain a Maslow’s Hierarchy of metrics: Impact > Outcome > Action. While we all strive to prove the impact of our work, sometimes it’s hard or impossible. Feature launches should have at least an outcome metric (which means logging and dashboards are in place). Longer projects that span multiple sprints or quarters are sometimes hard to graduate to outcome or impact metrics before they launch, and tend to stick with action metrics.

Here are some examples of real key result metrics we’ve had at Coda and how they could be reimagined as impact metrics, outcome metrics, action metrics, or without any metric.

Example KR metrics

Examples of real key result metrics we’ve had at Coda.

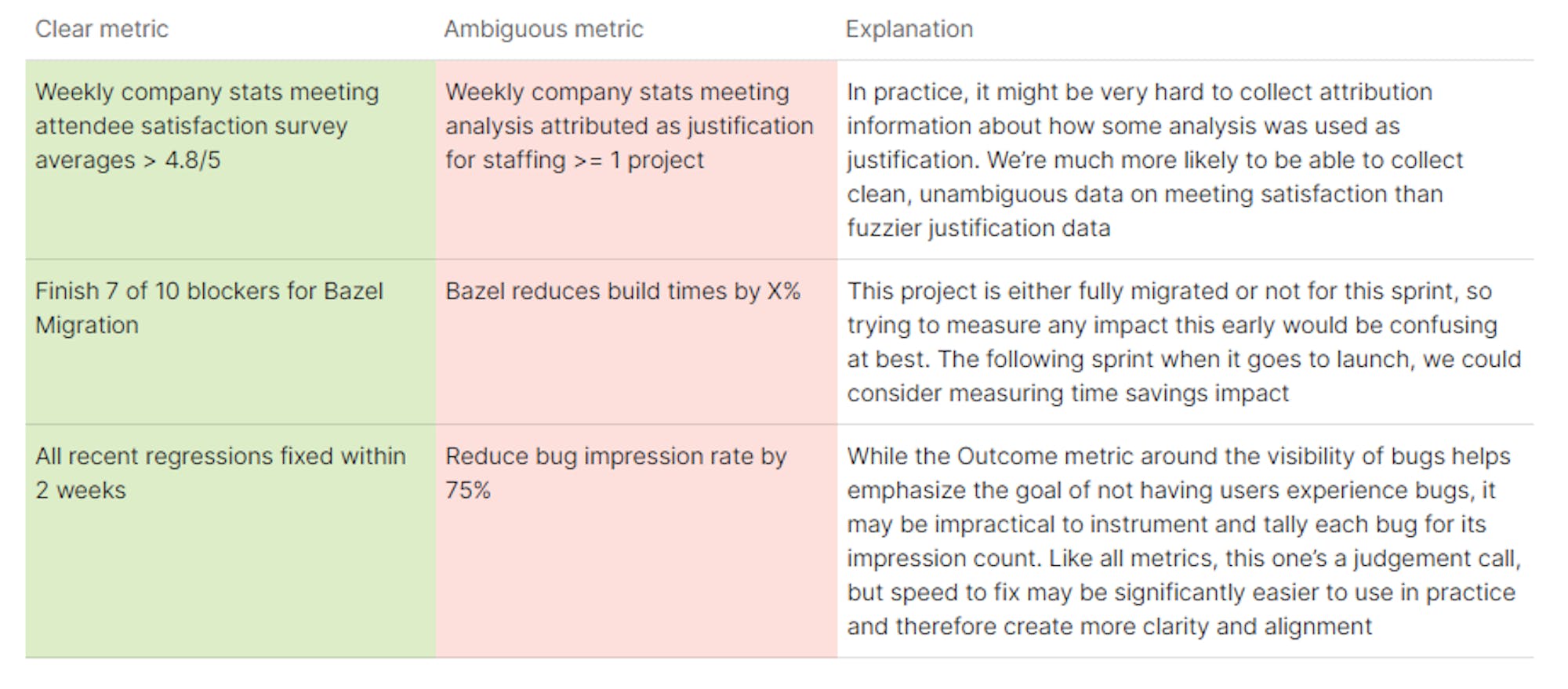

Clear metrics > ambiguous or impractical metrics

A few examples where a clear “weaker” metric is preferable to an ambiguous metric.

- Prefer clear, objective metrics over ambiguous or impractical ones.

- Seek to measure impact; if that isn’t possible, measure outcomes; if that isn’t possible either, measure actions.

- Use your metrics to tell the story of your project and its aspirations, and to reflect on what worked or didn’t.
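
For teams that keep their key results in a script or spreadsheet export, the fallback order above (impact, then outcome, then action) could be sketched roughly as follows. This is only an illustration under stated assumptions: the `KeyResult` record, its fields, and the helper names are hypothetical and do not describe Coda's actual table, and the sample metric text is invented.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Optional


class MetricLevel(IntEnum):
    """Hierarchy from this guide: Impact > Outcome > Action."""
    ACTION = 1
    OUTCOME = 2
    IMPACT = 3


@dataclass
class KeyResult:
    # Hypothetical record shape; the real table's columns are not specified here.
    name: str
    is_feature_launch: bool = False
    # Only clear, practical candidate metrics belong here, keyed by level.
    metrics: dict[MetricLevel, str] = field(default_factory=dict)


def strongest_metric(kr: KeyResult) -> Optional[tuple[MetricLevel, str]]:
    """Return the highest-level metric available, or None if there is none."""
    if not kr.metrics:
        return None
    level = max(kr.metrics)
    return level, kr.metrics[level]


def meets_launch_bar(kr: KeyResult) -> bool:
    """Feature launches should have at least an outcome metric."""
    if not kr.is_feature_launch:
        return True
    best = strongest_metric(kr)
    return best is not None and best[0] >= MetricLevel.OUTCOME


# Invented example: a launch that so far only has an action metric.
kr = KeyResult(
    name="Launch the new feature",
    is_feature_launch=True,
    metrics={MetricLevel.ACTION: "Feature shipped to all users"},
)
print(strongest_metric(kr))
print(meets_launch_bar(kr))
```

Leaving ambiguous or impractical metrics out of `metrics` entirely, rather than ranking them, mirrors the first takeaway: a clear action metric beats an impact metric nobody can actually measure.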