Add sync tables¶

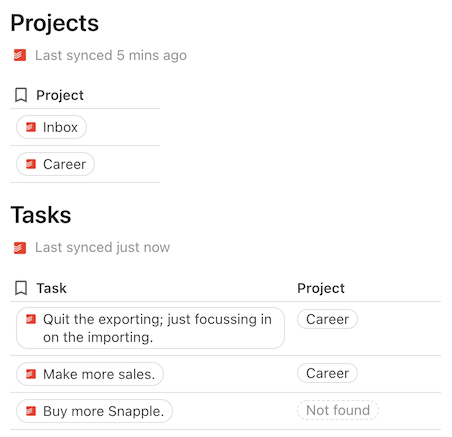

Tables are one of the most powerful features of Coda, and many times people use them to track items that are also represented in other systems. For example, you may have a table of upcoming events, each of which is already listed in a company calendar. Sync tables allow you to define a special type of table where the rows are automatically synced from an external data source such as an API.

Using sync tables¶

Sync tables are added directly to the document, usually by dragging them in from the side panel. Navigate to Insert > Packs > {Pack name} and drag the table into the canvas.

If the sync table doesn't have any required parameters it will start syncing immediately, otherwise you'll have to configure it first. The data in the table can be synced manually or set up to sync automatically at regular intervals.

Multiple syncs

Each sync table can only be added once to a document. If you want to sync data from multiple accounts or with different parameters you can add additional syncs to the table. In the table options pane click the Add another sync button and configure the settings. The results from all syncs will be appended to the same table, but you can use views and filters on that table to display them separately.

The data in the table is synced with the external source automatically on a schedule (at most hourly). In the table's Options pane users can set the sync frequency or start a manual sync. It is not possible to configure a button or automation to start a sync.

Creating a sync table¶

A sync table consists of three main parts: the schema for each row of data, the sync table definition, and the formula that populates the table.

Define the schema¶

Each row in a sync table corresponds to an item in an external data source. For example, the Todoist sample has one sync table for tasks and another for projects. For each table you must define a schema that represents the data you'll be syncing for that item. See the Schemas guide for more information about defining and using schemas.

The item is displayed as a chip in the first column of the sync table, and it contains the complete set of synced data. Properties of the schema designated as featuredProperties are automatically broken out into their own columns, but these can be removed or added to by the user later.

The schema used in a sync table can be shared by other building blocks in your Pack, like a formula or column format. Even when the schema is only used by the sync table, we recommend you define it as a separate variable in your code for readability and future reuse.

const TaskSchema = coda.makeObjectSchema({

// ...

});

Dynamic schemas

For some data sources it isn't possible to know the shape of the data upfront, and it can only be determined at runtime. In these cases instead of defining your schema upfront you can generate it dynamically. See the Dynamic sync tables guide for more information.

Define the sync table¶

The sync table itself is defined using the addSyncTable() method:

pack.addSyncTable({

name: "Tasks",

schema: TaskSchema,

identityName: "Task",

formula: {

// ...

},

});

It includes the name of the sync table, the schema for each row, and other metadata. The identityName property will be explained in the Identity section below.

Write the sync formula¶

Inside each sync table definition is a formula definition, detailing the hidden formula used to sync data into the sync table. If you aren't already familiar with creating formulas, read the Formulas guide first.

pack.addSyncTable({

// ...

formula: {

name: "SyncTasks",

description: "Sync the user's tasks.",

parameters: [],

execute: async function ([], context) {

// ...

},

},

});

This formula fetches items from the external data source, adjust them to fit the schema, and returns them. The return value must be a SyncFormulaResult, which is an object where the result property contains the array of item objects.

pack.addSyncTable({

// ...

formula: {

// ...

execute: async function ([], context) {

let url = "<URL to pull data from>";

let response = await context.fetcher.fetch({

method: "GET",

url: url,

});

let items = response.body.items;

// Adjust the items to fit the schema if required.

return {

result: items,

};

},

},

});

On each sync your code must return the full set of items, and Coda will determine how to update existing rows, remove old rows, etc. This could take a long time for large datasets or slow APIs, and the Long-running syncs section has more information on how to handle this.

Naming¶

The name of a sync table is visible to the user, and can only contain letters, numbers, and underscores. We recommend following these conventions:

- Select a plural noun corresponding to the item represented by each row. For example,

ProductsorSales. - For multiple words, use upper camel case. For example,

BugReports. - Don't include the Pack name in the name of the table. For example, use

Tasksinstead ofTodoistTasks. - Don't use adjectives in sync table names. For example,

ActiveProductsorRecentSales.

In these cases it is better to have a single sync table for all items of the same type, and then add parameters that allow users to filter by certain criteria.

In some areas of the UI, such as the settings pane, the name will be rewritten to add spaces ("BugReports" => "Bug Reports") for readability.

Warning

Changing the name of a sync table will break any existing docs that use it. When creating your Pack select your names carefully.

Identity¶

Every sync table is required to specify an identityName, which is a unique identifier for the table. By convention this is usually the singular form of the name of the item being synced. For instance, a "Tasks" table would use an identity name of "Task". This identity name is used to reference the table when creating row references or updating rows with actions.

By default the identity name is also used as the column name for the first column of the sync table, which contains the synced item as a chip. You can use a different name for the column by setting dynamicOptions.entityName on the sync table.

Parameters¶

The parameters defined on the sync formula are exposed to users as criteria in the sync table options. See the parameters guide for more information about how parameters are defined and displayed.

In most sync tables, parameters are used to allow users to filter the results in the sync table. Although users can always add filters to the resulting table to hide certain rows, it's faster and simpler to do that filtering in the sync formula itself.

Row limits¶

Each sync table has a user-defined maximum number of rows, which defaults to 1000 but can be set as high as 10,000. Once a sync table reaches the limit Coda will stop the sync (even if the code returned a continuation) and truncate the resulting rows to fit within the limit.

Long-running syncs¶

The sync formula that populates a sync table will timeout after a minute, but for some data sources it may take longer to retrieve all the results. In these cases you can utilize the continuation mechanism of sync tables to break your sync up into multiple executions. A continuation is like a save point, allowing you to record where you left off and then pick up again later.

If at the end of an execution there are more items left to sync, return a custom continuation object along with the synced items. Coda will then re-run your sync formula, passing in the previous continuation object. The synced items from each execution will be appended to the table. This process will continue until no continuation object is returned or the table reaches the row limit.

flowchart TD

subgraph coda [ ]

run(Run sync formula)

table(Sync table)

exists{Exists?}

subgraph pack [Pack]

request(Fetcher request)

response(Response)

results(Results)

continuation(Continuation?)

end

end

start(Start sync)

complete(Sync complete)

start --> run

run --> pack

request --> response

response --> results -- Append --> table

response --> continuation --> exists

exists -- No --> complete

exists -- Yes\nSet as context.sync.continuation --> runThe contents of the continuation are flexible and will depend on the API to the external data source, but usually involve offsets or page tokens. The continuation must be an object of type Continuation, which allows for storing string and number values. Continuations are not designed for persisting large amounts of data between executions, so we recommend against using a task queue pattern.

pack.addSyncTable({

// ...

formula: {

// ...

execute: async function ([], context) {

let previousContinuation = context.sync.continuation;

// If there is a previous continuation, pick up where you left off.

// Otherwise start from the beginning.

// Sync some items...

let nextContinuation;

// Determine if there are more items left to sync...

if (moreItemsLeft) {

nextContinuation = {

// Save your place...

};

}

return {

result: items,

continuation: nextContinuation,

};

},

},

});

Two-way sync¶

By default the sync process used by sync tables is one-way only; data is pulled in from an external source and is displayed in a read-only column. However, if the external API supports it you can utilize the two-way sync feature to allow users to edit column values directly in the sync table and then push those changes back to the original data source.

Learn more about this approach in the two-way sync guide.

Referencing rows from other sync tables¶

It's often the case that the different synced items in a Pack are related to each other. For example, a Pack may have sync tables for projects and tasks, where each task belongs to a single project. Using references you can connect these two tables together. References in sync tables work like relation columns in regular tables.

A reference must specify the identity of the target table as well as the ID of the target row. If that row has already been synced to the doc, then the reference is replaced with the data from that row. Otherwise a grayed out chip is displayed, indicating that the referenced row hasn't been synced yet.

See the Schemas guide for more information on how to create references in your table schema.

Account-specific fields¶

Some APIs vary the shape of the data returned based on the account being accessed. For example, an issue tracker may allow users to define custom fields for their bug reports, which the API also returns. A sync table must have a defined schema that represents the data for each item, but it is possible to expand that schema to accommodate these variations by using a dynamic schema. See the Dynamic sync tables guide for more information on how to use this feature.

Caching & recalculation¶

A sync table is not a live view into an external data source, but rather a snapshot of the data stored at the time of the last sync. Unlike formulas which are recalculated automatically when the parameters change, changes to sync table parameters will only be reflected during the next sync.

It's recommended that you reduce or disable HTTP caching of the fetcher requests used to populate your sync table. When users manually resync a table they expect the latest results, and HTTP caching can interfere with that. Caching may still be appropriate for requests that retrieve the same data during each sync formula execution.

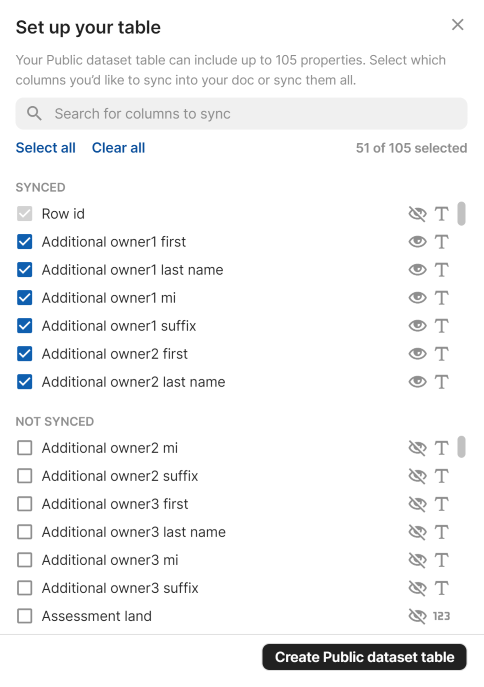

Columns selection¶

Although only featured columns are shown in the table by default, all of the schema properties are synced and stored in the table as their own columns. Additionally, the object chip in the first column of the sync table provides quick access to view and formulaically access the value of all properties.

For very large schemas all of these unused properties can come with a performance cost however, so users have the option to choose the exact set of columns they want to sync. This can be done by clicking the Sync more properties button in the sync table settings, and is launched automatically when creating the sync table with a very large schema.

Users can choose from top-level properties in the schema, and only those they select will be persisted in the document during the sync.

While there is no harm in your Pack retrieving properties that won't be persisted, in some cases you can optimize your sync if you know the exact set of fields the user is requesting. This can be determined by inspecting the value of context.sync.schema. This will be a copy of the original schema, but with only properties that the user selected.

Since the properties themselves may use the fromKey option to load their value from a different field in the row objects, it can be somewhat involved to map the properties back to API fields. To assist with this there is a helper function coda.getEffectivePropertyKeysFromSchema() that will do the conversion for you.

Example: Open Data NY sync table

import * as coda from "@codahq/packs-sdk";

export const pack = coda.newPack();

// The domain to connect to. Can be swapped for other domains hosting Socrata.

const Domain = "data.ny.gov";

// The max number of columns to include in the sync table by default.

const MaxFeaturedColumns = 50;

// How many rows to scan when determining the which columns to feature.

const TableScanMaxRows = 100;

// How many rows to fetch per-page.

const PageSize = 100;

// The maximum number of datasets to return in a search.

const MaxDatasets = 10000;

// A regular expression matching a dataset.

const DatasetUrlRegex = new RegExp(`^https?://${Domain}/.*/([^?#]+)`);

// Schema for an address (part of a location).

const AddressSchema = coda.makeObjectSchema({

properties: {

address: { type: coda.ValueType.String },

city: { type: coda.ValueType.String },

state: { type: coda.ValueType.String },

zip: { type: coda.ValueType.String },

},

displayProperty: "address",

});

// Schema for a location (used for locations and points).

const LocationSchema = coda.makeObjectSchema({

properties: {

coordinates: {

type: coda.ValueType.Array,

items: { type: coda.ValueType.Number },

},

latitude: { type: coda.ValueType.Number },

longitude: { type: coda.ValueType.Number },

address: { ...AddressSchema, fromKey: "human_address" },

},

displayProperty: "coordinates",

});

// A mapping from Socrata types to Coda schemas.

const TypeSchemaMap: Record<string, coda.Schema> = {

text: { type: coda.ValueType.String },

number: { type: coda.ValueType.Number },

checkbox: { type: coda.ValueType.Boolean },

calendar_date: {

type: coda.ValueType.String,

codaType: coda.ValueHintType.Date,

},

location: LocationSchema,

point: LocationSchema,

url: { type: coda.ValueType.String, codaType: coda.ValueHintType.Url },

};

// A base row schema, extended for each dataset.

const BaseRowSchema = coda.makeObjectSchema({

properties: {

rowId: { type: coda.ValueType.String, fromKey: ":id" },

},

idProperty: "rowId",

displayProperty: "rowId",

});

// Allow requests to the domain.

pack.addNetworkDomain(Domain);

// Use a system-wide application token to get additional quota.

// https://dev.socrata.com/docs/app-tokens.html

pack.setSystemAuthentication({

type: coda.AuthenticationType.CustomHeaderToken,

headerName: "X-App-Token",

});

// A dynamic sync table for the rows of a dataset.

pack.addDynamicSyncTable({

name: "PublicDataset",

identityName: "DatasetRow",

entityName: "Row",

// If new columns are added later, don't automatically feature them.

defaultAddDynamicColumns: false,

// Allow the user to browse the datasets by category.

listDynamicUrls: async function (context, category) {

if (!category) {

// Return the list of categories.

let categories = await getCategories(context);

return categories.map(category => {

return {

display: category,

value: category,

hasChildren: true,

};

});

}

// Return all the datasets in that category.

let datasets = await searchDatasets(context, {

categories: category,

only: "datasets",

domains: Domain,

search_context: Domain,

order: "page_views_last_month",

limit: MaxDatasets,

});

if (!datasets?.length) {

return [];

}

return datasets.map(dataset => {

return {

display: dataset.name,

value: dataset.link,

};

});

},

searchDynamicUrls: async function (context, search) {

let datasets = await searchDatasets(context, {

q: search,

only: "datasets",

domains: Domain,

search_context: Domain,

order: "relevance",

limit: MaxDatasets,

});

if (!datasets?.length) {

return [];

}

return datasets.map(dataset => {

return {

display: dataset.name,

value: dataset.link,

};

});

},

getName: async function (context) {

let dataset = await getDataset(context);

return dataset.name;

},

getSchema: async function (context) {

let dataset = await getDataset(context);

// Copy the base schema.

let schema: coda.GenericObjectSchema = {

...BaseRowSchema,

};

// Add a schema property for each column.

for (let column of dataset.columns) {

let name = column.name;

let fieldName = column.fieldName;

let dataType = column.dataTypeName;

let description = column.description;

if (fieldName.startsWith(":")) {

// Skip internal fields.

continue;

}

let fieldSchema = TypeSchemaMap[dataType];

if (!fieldSchema) {

throw new Error("Couldn't find schema for column type: " + dataType);

}

schema.properties[fieldName] = {

...fieldSchema,

displayName: name,

description: description,

};

}

// Determine which columns to feature.

schema.featuredProperties = await getFeatured(dataset, context);

// Add attribution information.

schema.attribution = getAttribution(dataset);

return schema;

},

getDisplayUrl: async function (context) {

return context.sync.dynamicUrl;

},

formula: {

name: "SyncDataset",

description: "Syncs the dataset.",

parameters: [

coda.makeParameter({

type: coda.ParameterType.String,

name: "search",

description: "If specified, only rows containing this search term " +

"will be included.",

optional: true,

}),

coda.makeParameter({

type: coda.ParameterType.String,

name: "filter",

description: "A SoQL $where clause to use to filter the results. " +

"https://dev.socrata.com/docs/queries/where.html",

optional: true,

}),

],

execute: async function ([search, filter], context) {

let dataset = await getDataset(context);

let offset = context.sync.continuation?.offset as number || 0;

// Only fetch the selected columns.

let fields = coda.getEffectivePropertyKeysFromSchema(context.sync.schema);

// Fetch the row data.

let baseUrl = `https://${Domain}/resource/${dataset.id}.json`;

let url = coda.withQueryParams(baseUrl, {

$select: fields.join(","),

$q: search,

$where: filter,

$limit: PageSize,

$offset: offset,

});

let response = await context.fetcher.fetch({

method: "GET",

url: url,

});

let rows = response.body;

// Transform the rows to match the schema.

for (let row of rows) {

for (let [key, value] of Object.entries(row)) {

row[key] = formatValue(value);

}

}

let continution = null;

if (rows.length > 0) {

// Keep fetching rows until we get an empty page.

continution = { offset: offset + PageSize };

}

return {

result: rows,

continuation: continution,

};

},

},

});

/**

* Reformat a row value to match the schema.

*/

function formatValue(value) {

if (typeof value === "object") {

let obj = value as Record<string, any>;

if (obj.url) {

// Pull up the URL.

value = obj.url;

} else if (obj.type === "Point") {

// Format point to LocationSchema.

value = {

latitude: obj.coordinates[1],

longitude: obj.coordinates[0],

// A point's coordinates are returned as x,y instead of lat,long.

coordinates: obj.coordinates.reverse(),

};

} else if (obj.latitude && obj.longitude) {

// Format location to LocationSchema.

value = {

...obj,

coordinates: [obj.latitude, obj.longitude],

};

}

}

return value;

}

/**

* Get the list of dataset categories.

*/

async function getCategories(context: coda.ExecutionContext):

Promise<string[]> {

let baseUrl = `https://${Domain}/api/catalog/v1/domain_categories`;

let url = coda.withQueryParams(baseUrl, {

domains: Domain,

});

let response = await context.fetcher.fetch({

method: "GET",

url: url,

});

return response.body.results.map(result => result.domain_category);

}

/**

* Search for datasets, using a flexible set of parameters.

*/

async function searchDatasets(context: coda.ExecutionContext,

params: Record<string, any>): Promise<DatasetResult[]> {

let url = coda.withQueryParams(`https://${Domain}/api/catalog/v1`, params);

let response = await context.fetcher.fetch({

method: "GET",

url: url,

});

return response.body.results.map(result => {

return {

...result.resource,

...result,

};

});

}

/**

* Get a dataset by ID.

*/

async function getDataset(context: coda.SyncExecutionContext):

Promise<Dataset> {

let datasetUrl = context.sync.dynamicUrl;

let datasetId = getDatasetId(datasetUrl);

let url = `https://${Domain}/api/views/${datasetId}.json`;

let response = await context.fetcher.fetch({

method: "GET",

url: url,

});

return response.body;

}

/**

* Extract the ID of the dataset from its URL.

*/

function getDatasetId(url: string): string {

let match = url.match(DatasetUrlRegex);

if (!match) {

throw new coda.UserVisibleError("Invalid dataset URL: " + url);

}

return match[1];

}

/**

* Determine which rows to feature (include in the table by default) for a given

* dataset.

*/

async function getFeatured(dataset: Dataset, context: coda.ExecutionContext):

Promise<string[]> {

// Fetch some of the first rows from the dataset.

let baseUrl = `https://${Domain}/resource/${dataset.id}.json`;

let url = coda.withQueryParams(baseUrl, {

$limit: TableScanMaxRows,

});

let response = await context.fetcher.fetch({

method: "GET",

url: url,

});

let rows = response.body;

// Count how many times each column has a value.

let columnCount: Record<string, number> = {};

for (let row of rows) {

for (let [key, value] of Object.entries(row)) {

if (!columnCount[key]) {

columnCount[key] = 0;

}

if (value) {

columnCount[key]++;

}

}

}

// Return the list of columns that have at least one value in the scanned

// rows, up to a defined maximum.

return dataset.columns.map(column => column.fieldName)

.filter(column => columnCount[column] > 0)

.filter(column => !column.startsWith(":"))

.slice(0, MaxFeaturedColumns);

}

/**

* Get the attribution node for a given dataset.

*/

function getAttribution(dataset: Dataset): coda.AttributionNode[] {

if (!dataset.attribution) {

return null;

}

let node;

if (dataset.attributionLink) {

node = coda.makeAttributionNode({

type: coda.AttributionNodeType.Link,

anchorText: dataset.attribution,

anchorUrl: dataset.attributionLink,

});

} else {

node = coda.makeAttributionNode({

type: coda.AttributionNodeType.Text,

text: dataset.attribution,

});

}

return [node];

}

// A dataset search result.

interface DatasetResult {

name: string;

link: string;

}

// The dataset metadata.

interface Dataset {

id: string;

name: string;

description: string;

columns: DatasetColumn[];

attribution: string;

attributionLink: string;

}

// A dataset column definition.

interface DatasetColumn {

name: string;

description: string;

fieldName: string;

dataTypeName: string;

}