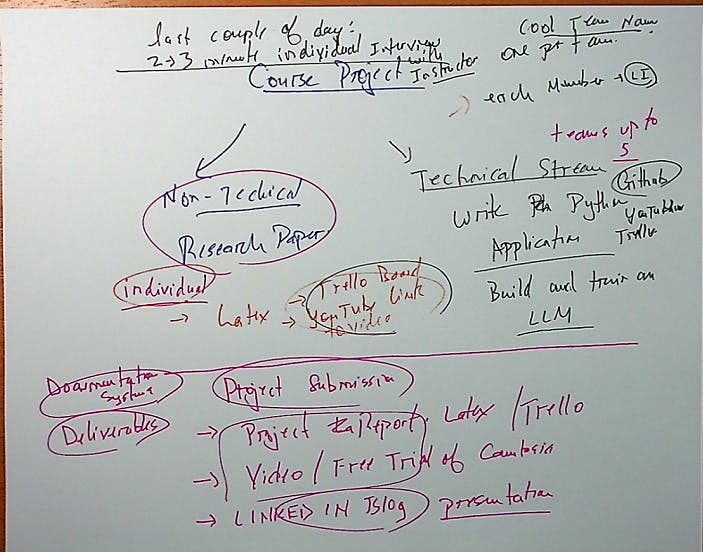

Summer 23 Project Document: AML 3304 Course Project: Writing Python to Construct a Simple Generative AI Language Model

The due date is Week 13.

Last edited 157 days ago by System Writer

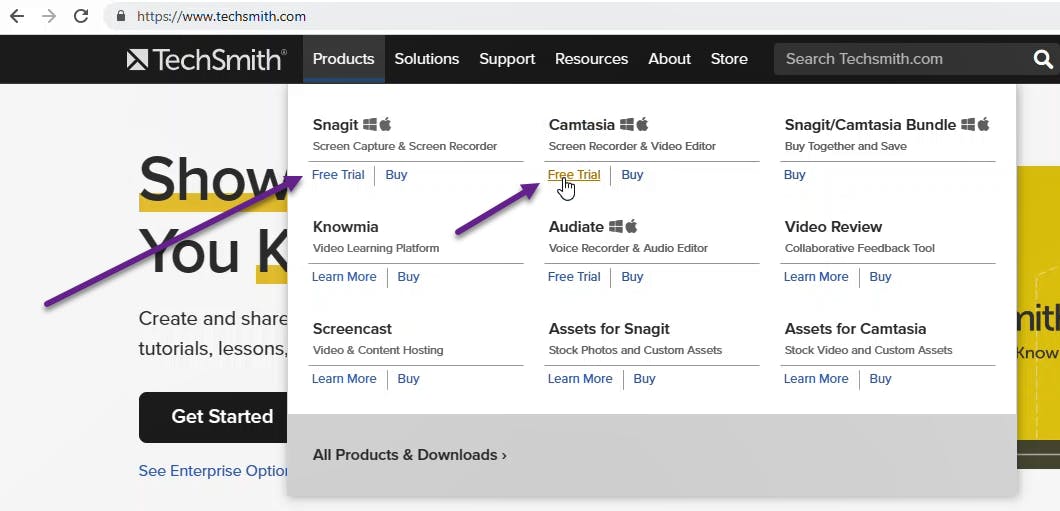

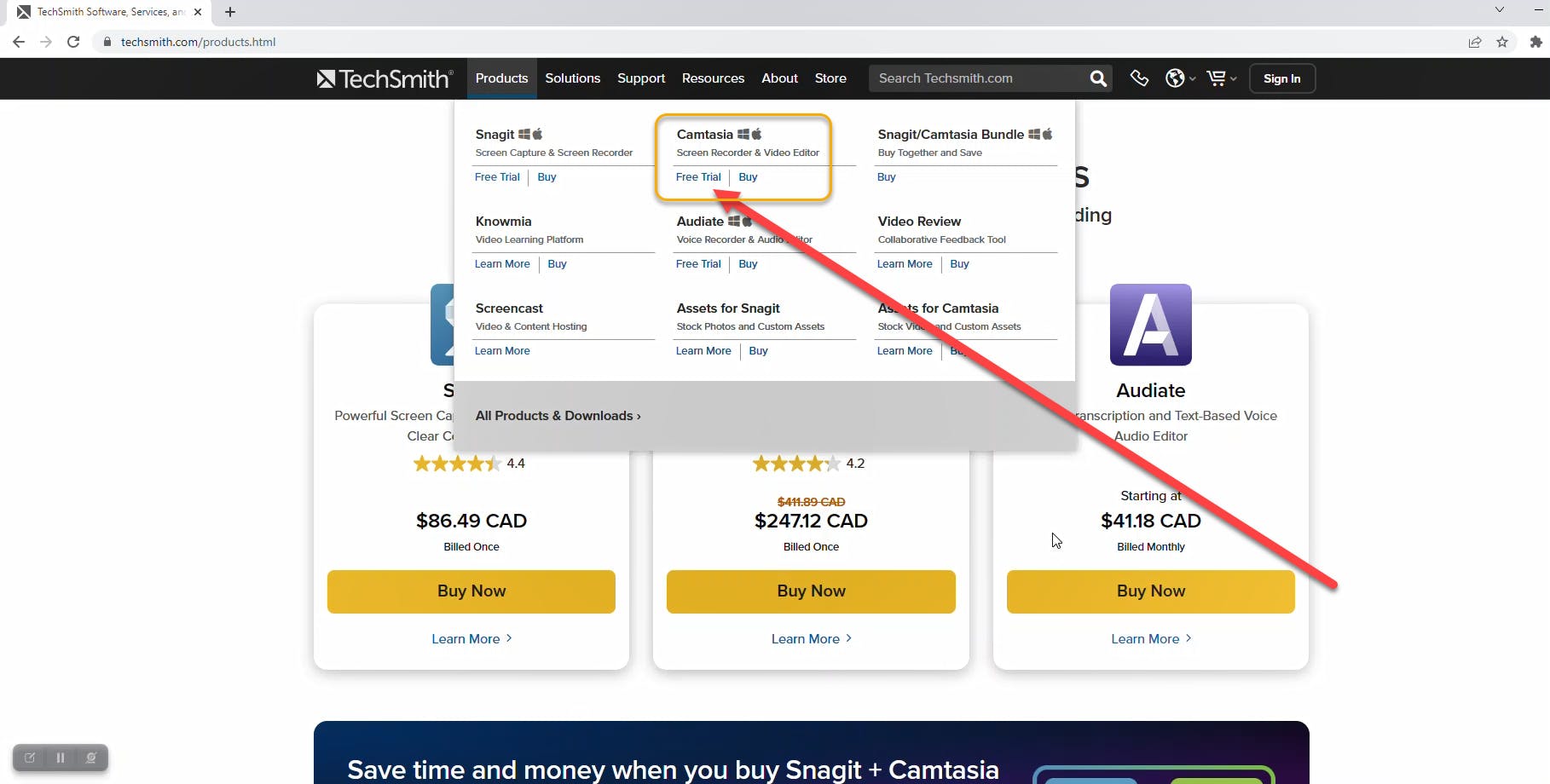

Steps to make a Video:

Using to make a Video:

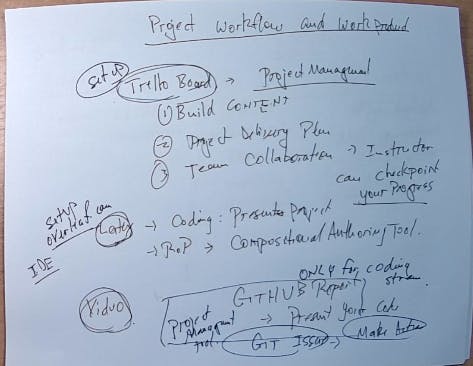

Deliverable Requirements and Submission Format for the Coding Project.

Make a PowerPoint storyboard to serve as the backdrop for your video. Name it TeamName.pptx, and upload the storyboard file as well.

You are doing this to let Employers see the great work you are doing!

Research Paper Stream:

Upload your Paper to:

Here are elements to address for the Research Paper:

Welcome to the lab workbook for AML 3304, where you will learn how to build a simple generative AI language model similar to ChatGPT.

This lab workbook will guide you through the process of building your first language model, step-by-step.

Prerequisites

Step 1: Installing Required Libraries

Step 2: Data Collection and Preprocessing

The next step is to collect and preprocess the data.
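As a rough illustration of what preprocessing for a language model can look like, the sketch below tokenizes a tiny text, builds a word-to-id vocabulary, and cuts the ids into fixed-length input windows, each paired with the next word as the target. It uses only the Python standard library. The sample corpus, the function names (`tokenize`, `build_vocab`, `make_sequences`), and the window length of 3 are assumptions made for this example, not the workbook's own code; the workbook lists NLTK for this step, which would normally replace the simple regular-expression tokenizer used here.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens (hypothetical helper)."""
    return re.findall(r"[a-z']+", text.lower())


def build_vocab(tokens):
    """Map each distinct token to an integer id, most frequent token first."""
    counts = Counter(tokens)
    return {word: idx for idx, (word, _) in enumerate(counts.most_common())}


def make_sequences(ids, seq_len):
    """Slide a window over the ids: seq_len input ids followed by one target id."""
    return [
        (ids[i:i + seq_len], ids[i + seq_len])
        for i in range(len(ids) - seq_len)
    ]


# Assumed toy corpus, for illustration only.
corpus = "The cat sat on the mat. The dog sat on the log."

tokens = tokenize(corpus)
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
pairs = make_sequences(ids, seq_len=3)

print(len(tokens), "tokens,", len(vocab), "distinct words,", len(pairs), "training pairs")
```

Each pair holds a short context and the word that follows it, which is the input/target shape a next-word model is typically trained on.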

Step 3: Building the Language Model

Lecture Notebook: The Role and Use of a Neural Network with a Single LSTM Layer

Introduction

Long Short-Term Memory (LSTM)

LSTM Architecture

Using LSTMs for Natural Language Processing

Conclusion

Is Long Short-Term Memory Used in ChatGPT?

Step 4: Training the Language Model

Step 5: Generating Text

Conclusion

Step 1: Installing Required Libraries

TensorFlow

Keras

NumPy

Pandas

Matplotlib

Step 2: Data Collection and Preprocessing

NLTK

Step 3: Building the Language Model

LSTM

Step 4: Training the Language Model

Compile and Fit

Step 5: Generating Text

Sampling
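One common way to sample the next word from a model's predicted probabilities is temperature sampling, sketched below with only the Python standard library. This is an assumed technique chosen for illustration; the workbook's own sampling method is not shown here. The function names `apply_temperature` and `sample_index` and the example probabilities are hypothetical.

```python
import math
import random


def apply_temperature(probs, temperature=1.0):
    """Rescale a probability distribution; lower temperature sharpens it."""
    # Work in log space; a small epsilon avoids log(0).
    logits = [math.log(p + 1e-12) / temperature for p in probs]
    # Softmax, subtracting the maximum for numerical stability.
    peak = max(logits)
    weights = [math.exp(value - peak) for value in logits]
    total = sum(weights)
    return [w / total for w in weights]


def sample_index(probs, temperature=1.0, rng=random):
    """Draw one index according to the temperature-adjusted distribution."""
    scaled = apply_temperature(probs, temperature)
    return rng.choices(range(len(scaled)), weights=scaled, k=1)[0]


# Assumed example: predicted probabilities for three candidate words.
predicted = [0.1, 0.7, 0.2]
rng = random.Random(0)  # seeded generator so repeated runs draw the same samples

print(apply_temperature(predicted, temperature=0.5))
print(sample_index(predicted, temperature=1.0, rng=rng))
```

A temperature near 0 makes the choice almost always the most likely word, while a temperature above 1 flattens the distribution and produces more varied text.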

Conclusion

Tabulate the benefits of each language model.
