Lecture: Data Science Concepts, Tools, and Math: Bayesian Math and Generative AI Programs

Bayesian models are prediction-building engines. They work by applying statistical mathematics, implemented here as Python algorithms.

Data Science: The Art of Transforming Raw Data into Knowledge, Wisdom and Insight

A. Data Science Concepts

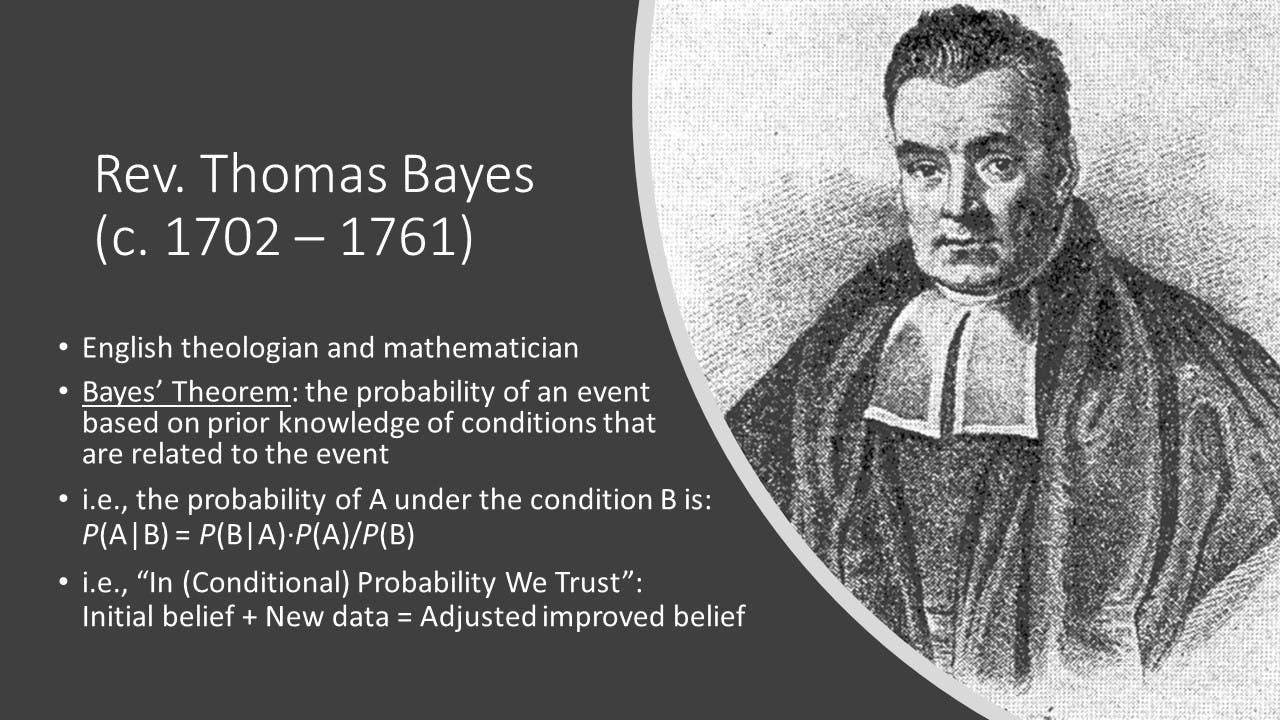

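This section shows how PyTorch applies Bayesian mathematics through the Pyro library. Pyro is a companion library for PyTorch that enables probabilistic programming on neural networks written in PyTorch [1]. The example below defines a Bayesian linear regression model and fits it with stochastic variational inference (SVI).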

```python
import torch
import pyro
import pyro.distributions as dist
from pyro.infer import SVI, Trace_ELBO
from pyro.optim import Adam


def linear_regression(x, w, b):
    # Deterministic regression mean. Observation noise is modeled once,
    # by the Normal likelihood in model(), so it is not added here.
    return w * x + b


def model(x, y):
    # Priors over the slope, the intercept, and the noise scale.
    w = pyro.sample("w", dist.Normal(0.0, 1.0))
    b = pyro.sample("b", dist.Normal(0.0, 1.0))
    noise_stddev = pyro.sample("noise_stddev", dist.HalfNormal(1.0))

    y_hat = linear_regression(x, w, b)
    # Likelihood of the observed targets; the plate marks the data points
    # as conditionally independent given w, b, and noise_stddev.
    with pyro.plate("data", x.shape[0]):
        pyro.sample("y", dist.Normal(y_hat, noise_stddev), obs=y)


def guide(x, y):
    # Learnable parameters of the variational (approximate) posterior.
    w_loc = pyro.param("w_loc", torch.tensor(0.0))
    w_scale = pyro.param("w_scale", torch.tensor(1.0), constraint=dist.constraints.positive)
    b_loc = pyro.param("b_loc", torch.tensor(0.0))
    b_scale = pyro.param("b_scale", torch.tensor(1.0), constraint=dist.constraints.positive)
    # This parameter is the scale of a HalfNormal, so it is named as a scale.
    noise_stddev_scale = pyro.param("noise_stddev_scale", torch.tensor(1.0), constraint=dist.constraints.positive)

    # Each latent site in the model needs a matching site in the guide.
    pyro.sample("w", dist.Normal(w_loc, w_scale))
    pyro.sample("b", dist.Normal(b_loc, b_scale))
    pyro.sample("noise_stddev", dist.HalfNormal(noise_stddev_scale))


# Generate synthetic data from y = 3x + 2 with Gaussian noise (std 0.5)
x = torch.randn(100)
y = 3 * x + 2 + torch.normal(0.0, 0.5, size=x.shape)

# Set up the optimizer and the inference algorithm
pyro.clear_param_store()
optimizer = Adam({"lr": 0.01})
svi = SVI(model, guide, optimizer, loss=Trace_ELBO())

# Train the model
num_iterations = 1000
for i in range(num_iterations):
    loss = svi.step(x, y)
    if i % 100 == 0:
        print(f"step {i}: ELBO loss = {loss:.2f}")

# Inspect the fitted posterior means (expected to move toward 3 and 2)
for name in ("w_loc", "b_loc"):
    print(name, pyro.param(name).item())
```
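As a rough sanity check on what the SVI run above should recover, the same kind of synthetic data (slope 3, intercept 2, noise standard deviation 0.5) can also be fit with ordinary least squares in closed form. This is a swapped-in, non-Bayesian baseline that uses only the Python standard library and is not part of Pyro. The helper name `fit_ols` and the fixed seed are assumptions made for illustration.

```python
import random


def fit_ols(xs, ys):
    """Closed-form least-squares slope and intercept for y = w * x + b.

    Hypothetical helper for illustration; not a Pyro or PyTorch function.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope is the ratio of the x-y covariance to the variance of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(xs, ys))
    sxx = sum((xi - mean_x) ** 2 for xi in xs)
    w = sxy / sxx
    b = mean_y - w * mean_x
    return w, b


# Synthetic data with the same generating process as the lecture example.
rng = random.Random(0)  # fixed seed, chosen arbitrarily for repeatability
xs = [rng.gauss(0.0, 1.0) for _ in range(100)]
ys = [3.0 * xi + 2.0 + rng.gauss(0.0, 0.5) for xi in xs]

w_hat, b_hat = fit_ols(xs, ys)
print(f"w = {w_hat:.2f}, b = {b_hat:.2f}")
```

With 100 data points and fairly weak priors, the posterior means learned by the guide would be expected to land reasonably close to these least-squares estimates, though the Normal(0, 1) priors pull them slightly toward zero.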

B. Data Science Tools

Bayesian Math: A Key to Unlocking Generative AI Programs

Bayes' Theorem
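Bayes' theorem relates a prior belief P(H) about a hypothesis H to the posterior P(H | D) after observing data D: P(H | D) = P(D | H) P(H) / P(D). As a minimal sketch, the function below applies it to a binary hypothesis. The function name `posterior` and the numbers in the usage lines (a 1% prior, a 95% true-positive rate, a 5% false-positive rate) are illustrative assumptions, not values from the lecture.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Return P(H | D) for a binary hypothesis H via Bayes' theorem.

    prior:               P(H)
    likelihood:          P(D | H)
    false_positive_rate: P(D | not H)
    """
    # P(D) by the law of total probability over H and not H.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: a rare hypothesis and a fairly reliable signal.
p = posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(f"P(H | D) = {p:.3f}")  # about 0.161
```

Even with a reliable signal, the posterior stays modest because the prior is small. This is the same prior-times-likelihood update that the Pyro model performs over continuous parameters.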

Using Bayesian Math in Building the Generative AI Language Model

Conclusion
