
The project is contextual and relevant to the learners. It solves a problem that is real for them (homework) as opposed to some abstract concept that is hard to relate to.

Project-based learning allows learners to develop deep content knowledge and understanding through collaboration and critical thinking. And, because the project solves a problem which is relevant in their own lives, they are intrinsically motivated to engage, ask questions and apply themselves to more challenging tasks.

The learners experience a high level of autonomy - the project’s questions are largely open-ended and encourage student creativity by inviting them to customise the bot in a way that feels exciting to them. They are also using Python to code the AI, a programming language that is very accessible to new coders (it is not as syntax-heavy as a lot of other text-based coding languages). This provides a lot more autonomy than block-based coding experiences (which most learners work with in school), because there are many different ways you can code the bot.

It opens up a lot of ethical questions for young learners. Just because an AI-bot can do your homework, should you be using AI for that? These kinds of questions naturally arise from this project and mean that the learners also get to engage with the tech on a critical and philosophical level.

It gives learners exposure to a real-world coding environment. This means that when they leave school, they are actually able to apply their coding knowledge in the work environment.

It’s mobile-first: accessible on mobile, desktop, laptop and tablets/iPads.

It is accessible (there is a free product for educational organisations and schools) and is simple to set up (it’s completely browser-based and requires no downloads).

It allows for a multiplayer experience - this is important because it facilitates pair-programming and, therefore, peer-learning.

Help the learners become familiar with the coding environment and practice navigating through the different files

Help learners identify the prompt which activates the AI

Get learners familiar with interacting with the AI in the console

Get the learners to co-write lists with the AI

The treasure-hunting element assists the learners in becoming familiar with the platform quickly. It helps them understand where they code (in the main file) and where they interact with the AI (in the console). Without this, the learners take longer to navigate the project and take on the next task.

There are a few popular topics which learners always use to test the AI’s ability to give outputs - these include food, sports teams and animals.

Already from the first project, we could identify a distinct difference between schools with a culture of curiosity and those without. This would impact the engagement of the learners (and whether they could stay with the more complex challenges for longer), as well as how many tasks they would complete overall.

In schools with a curious culture:

- Learners would explore the different project files before being prompted
- Learners would click through and explore Replit to see what else they could find on the platform
- Learners would click on interactive elements like the “run” button (even before knowing what it was for)
- Learners would make changes in their code and call us over to help them fix bugs/errors (even before we had started with the actual tasks)
- Learners would ask questions about their approach rather than their result/outcomes
- Teachers in the room would ask the learners about their project and what they think is happening
- Teachers in the room would encourage peer-learning

We could tell when a school had a less curious culture because:

- The learners were less independent and would wait for instruction before exploring, testing or trying anything
- The learners would call the facilitators over more often to ask if they were doing the task “right”
- The learners displayed fear of making mistakes and needed a lot of encouragement to learn through trial and error
- Teachers in the room would step in or call the facilitators over if a student appeared to be struggling even slightly
- Teachers in the room would discourage animated peer-to-peer discussions

While the game-like set-up of the project did allow for easier comprehension and quicker understanding of the project set-up, most learners needed prompting before exploring the project or testing different things. We think this is largely due to classroom culture.

Help the learners understand their role in prompting AI and how that influences outputs

Give the students a hands-on experience of prompt-crafting and personalising their AI

Assist the students in co-creating exciting (and tailored) outputs with the AI

Help the students unleash their creativity and test the AI’s limits

Assist them in carrying out and understanding basic Python commands

Students generally take a while to realize that they have agency over the AI and can influence its outputs depending on how they craft their prompt. Even when they notice that the age changes the complexity of the AI’s outputs, they often think that the age is the only parameter which they can use to customize the AI.

We also noticed that at the beginning of this project, the learners do not question the AI’s output or why it responds in certain ways - they are more interested in marking off the achievements. It is only once the project asks them to generate more creative outputs that they begin to engage with it differently.

Facilitators had to give students examples such as Siri, and how she can tend to be quick-witted or sarcastic. When facilitators explain to students that they have the opportunity to build their own version of Siri, that’s when it clicks for most students that they can prompt the AI to respond in multiple ways.

Interestingly, the learners tend to get really creative in building the AI’s personality and seeing how it responds to certain topics or questions. They enjoy anthropomorphising the AI. This is different to what we have observed in adults who have also done this project - they tend to test the AI’s functionality for different use cases.

Students really love customizing their project, from changing the prompt to choosing their own colour for the text and even making up their own name. This sense of autonomy excites and engages them. They even get quite frustrated when the programme doesn’t have certain colours available to them. They often spend a lot of time on personalisation (as opposed to adults who change the colour once, for example, and are then satisfied that they have completed that task).

We think that this sense of ownership increases a sense of achievement and therefore also increases engagement.

This project is also where most of the students have their “Aha!” moments (we define these as moments where learners discover something new about themselves or the world, or where they persevere through something challenging). This is where they start to understand that AI is built and influenced by human input.

Most of the code in this project is already set up for them and the students have to identify where they need to make adjustments to the code in order to personalise the AI. This means that the students learn coding commands as a by-product of engaging with the AI. We think this is why learners who identified as non-coders would also enjoy the coding aspect of this project.

The most common piece of feedback we received from learners was that this project made them realise that coding is a lot more fun than they originally thought.

One of the most common pieces of feedback from educators was surprise that the facilitators managed to engage so many learners - even those who are typically disengaged in IT or coding class.

Encourage the learners’ critical thinking to analyze the AI’s output

Kick-start thoughts about the ethics behind AI

Help the learners understand how the AI is generating outputs

Assist the learners in thinking philosophically about AI

At first, most learners believe that the AI is NOT biased.

To test bias, younger learners ask the AI’s opinion or preference (e.g. they ask it whether it thinks Manchester United is better than Liverpool, or which flavour of ice-cream it thinks is more delicious).

Older learners (ages 15 and up) ask the AI more philosophical questions about religion, politics, race or gender (and are very disappointed when our safety filter prevents the AI from giving controversial opinions).

Some of the learners thought that the safety filter indicated the AI’s bias.

Other learners identified that the AI is better at writing in Western languages (like French and English) than it is in African languages and used this as their reason for the AI’s bias.

We didn’t have any learners ask us what bias was (even the younger learners).

Interestingly, learners who didn’t get to this question in the project would often cite “non-bias” as a positive reason to use AI.

Learners used online plagiarism checkers to test the AI’s output and most were shocked to discover that the percentage of plagiarism was either 0% or close to that of a human.

Before this question, a large majority of learners think that the AI is simply Googling answers for its outputs (despite the fact that they get it to give them creative outputs like movie scripts and poems).

This is where most of the learners start to understand that the AI is creating the outputs, not simply regurgitating facts or findings from the internet.

Do you think the AI could do your homework for you?

What do you think are the pros of this technology?

All the students agreed that it could make your life a lot easier and leave you with more time to pursue leisure.

Some students expressed that the AI could offer more diverse perspectives than a human can.

Students who had not completed the third project expressed that the AI would be beneficial because it would be less biased than humans.

Many students expressed excitement at the ways that the AI could enrich their own creative practice by offering them diverse ideas (they saw the AI as a tool to support brainstorming).

This question would spark debate among the students. Some students would express concern that this could cause laziness or stop students from learning how to do things like write essays. Other students would disagree and say that if they put the effort in to code the AI, they are still learning and applying knowledge.

Interestingly, many adults respond to this question by expressing fear of job loss; however, this was not the case with young learners.

The main fear for students was around cyber-security and what could be done with this technology “if it got into the wrong hands”.


This year over 3200 learners aged 11-16 got to build and play with some of the latest AI technology through Mindjoy’s Hackathons (powered by GPT-3). Here is what we learnt 🧑💻

Artificial Intelligence is fundamentally changing the way we work, engage, and interact with each other, but its impact is largely felt and not fully understood. Giving kids a chance to interact and build with AI will encourage innovation, foster inclusivity, teach critical skills and support young learners in preparing for the future.

Over the past 6 months we’ve run approximately 50 AI hackathons across South Africa, Kenya and the Netherlands with over 3200 learners, aged 11-16. Our goal was to expose learners to fundamental 21st-century skills and tools, so they had the opportunity to build a series of projects using cutting-edge AI tools like GPT-3.

In this time, we observed a lot of incredible patterns and witnessed the potential of the youth to create with AI.

WHAT EXACTLY IS AI?

How young learners understand it

Today, young people are growing up in a world which is being shaped by AI. Streaming platforms like YouTube, TikTok and Spotify provide recommendations for videos and songs based on your behaviour. E-commerce sites make shopping recommendations based on previous buys. WhatsApp gives you recommendations for the next best word on your keyboard, and AI virtual assistants like Siri or Alexa have become household names.

However, AI is a difficult concept to grasp beyond the forms seen in movies (e.g. human-like robots) because it is designed to operate quietly in the background of daily tasks, working seamlessly in conjunction with other technologies.

When we asked learners what they knew about AI, there was no doubt that this tech is pervasive and used daily (we were confidently told by learners that, “My computer/Phone/iPad uses AI”). However, when we asked them to be specific about HOW their computer/phone uses AI, more often than not, they couldn’t give concrete examples (unless prompted with examples of apps, like YouTube).

In fact, the response we got most often to the question: “Can you give an example of AI?” was robots.

Understanding AI will be a superpower, especially for those who learn how to harness this tech as a tool for driving greater innovation, creativity and problem-solving. At Mindjoy, we want every young learner to feel empowered and able to contribute to a future (and present) ruled by AI.

WHY HACKATHONS?

And what exactly is a Hackathon?

Hackathons are a fun and engaging way to offer insight into this tech through a hands-on experience of coding and prompt-crafting. In just 90 minutes, learners were paired up and guided through 3 distinct projects which supported them through coding and prompt-crafting to build their own personalised AI-bot.

The mission: build an AI-bot that can do your homework for you.

In each Hackathon, facilitators elicit insight into the tech through open discussions, coaching questions and a reflection at the end of the session. Healthy competition (with some small prizes to be won) encouraged engagement, and students were encouraged to learn through trial and error.

We can safely say that the majority of learners loved this experience (out of 3200+ learners, we had an average NPS student rating of 8.5 out of 10), and here are the main reasons why we think it works:

In our definition, a Hackathon is a timed competition where participants need to solve a common problem with tech. In this case, the learners are solving their homework problem using AI (and having a whole bunch of fun in the process).

Introduction to AI and GPT-3

PROJECT DESIGN

Using cutting-edge technology like GPT-3

In the Hackathons, the students are guided through 3 distinct projects which use GPT-3. GPT-3 (or Generative Pre-trained Transformer 3) is a language model that uses deep learning to produce human-like text. In layman’s terms, it’s a powerful and well-trained text generator that, with a small amount of input/guidance, can produce text that reads very much like natural language. A language model is essentially a type of AI that is trained to guess the next best word or phrase based on the context you give it (e.g. Google Predictive Search or Predictive Text on your phone’s keyboard).
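
To make "guessing the next best word" concrete, here is a minimal sketch in Python. It is a toy and not how GPT-3 works internally: it only counts which word most often follows another in a tiny made-up sentence. The function names and the sample sentence are our own assumptions for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up training sentence (an assumption, purely for illustration).
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often here
```

A real language model looks at far more context than one previous word, but the basic job of predicting what comes next is the same.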

We wanted to use GPT-3 because it gives learners exposure to cutting-edge tech. AI is generally a new field and not yet covered in a lot of schools’ curricula. It’s mainly seen as a subsection to coding and robotics, but we believe it deserves more focus as it is highly prevalent in modern tech and the future working environment.

Using GPT-3, learners are tasked to prompt the AI to do their homework and write things like essays, poems and movie scripts. As they progress through the different projects, they are challenged to think deeper about how the technology is working and how else they might prompt it to give them their desired outputs.

Coding on Replit

All our coding projects are hosted on Replit - an incredibly accessible, cloud-based IDE which allows programmers to code in over 50 different languages from a Chrome tab, no setup required AND it’s multiplayer (think Google Docs for coding). We use this platform because:

Multiplayer Experiences

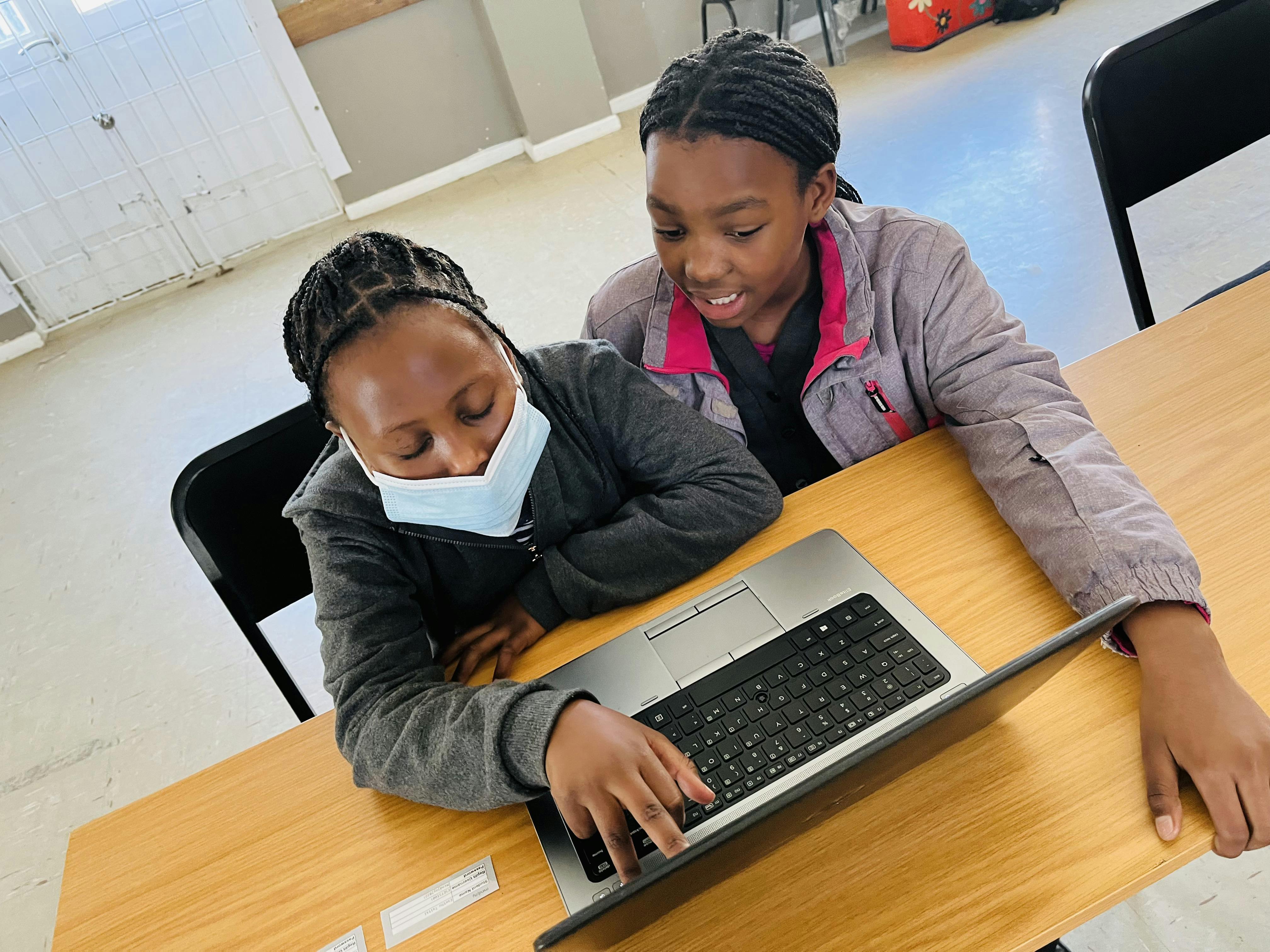

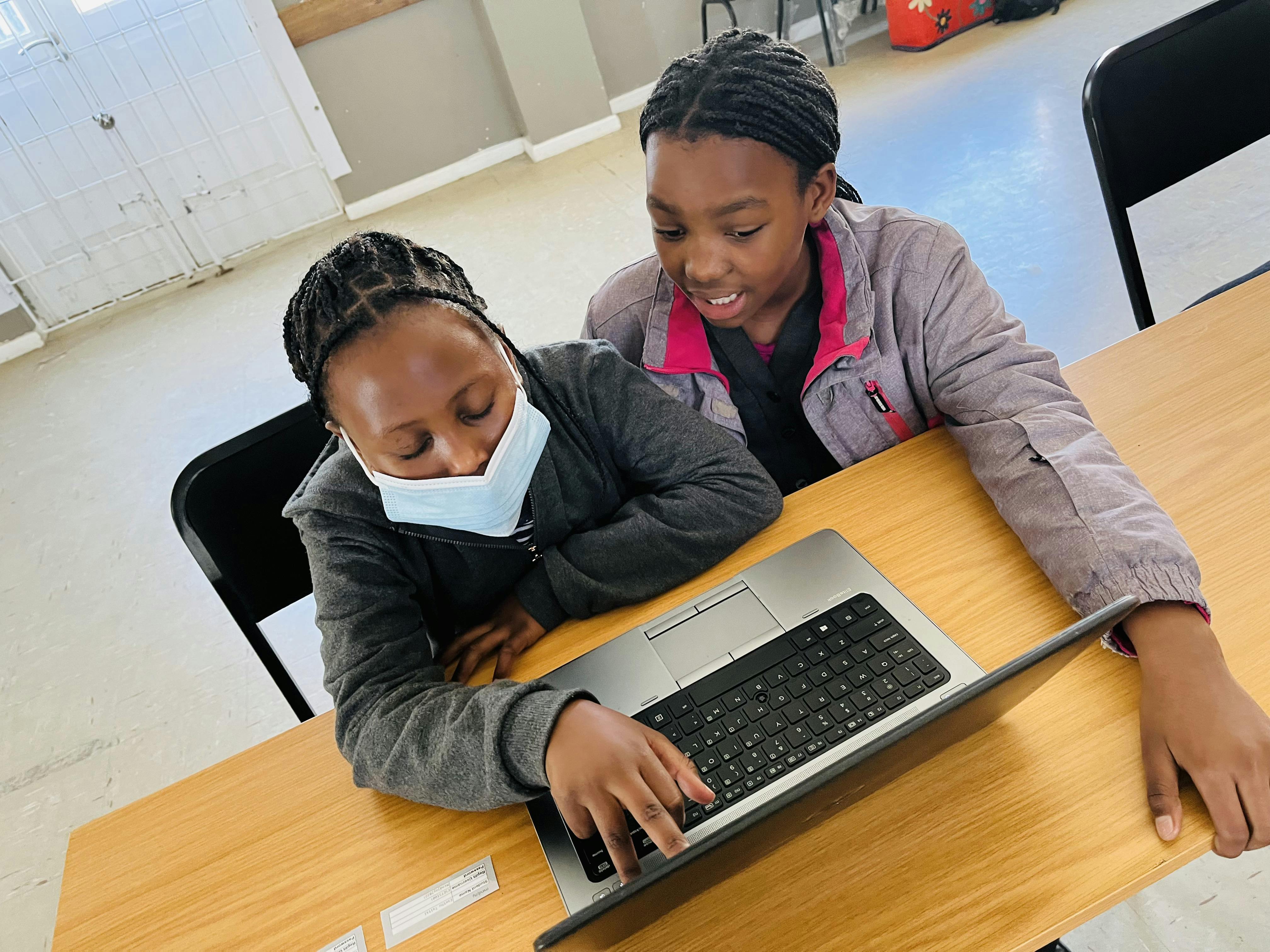

Learning is intrinsically a social experience. We cannot separate what we learn from the context in which we learn it. Peer-to-peer learning is effective in cementing skills like collaboration, communication, teamwork and independence, while also encouraging deeper engagement.

We tested the effectiveness of peer-learning in the Hackathons by first running it as an individual task - where each student worked on their own separate project, and then a multiplayer task.

What we discovered was that the peer-to-peer learning environment improved the learners’ experience (our NPS score went from averaging around 7 to 8.5), as well as their engagement and understanding.

With at least 2 brains in a team, the learners could focus more on understanding, as opposed to just solving. Interactivity meant that learners stayed engaged with problems for longer, and retained information and learnings better. Peer-feedback allowed learners to problem-solve with more rigour and find multiple solutions to a problem.

The map file

WHAT WE NOTICED

Trends and patterns that emerged with the learners and how they engaged with the AI

In project one...

The first project is an introduction to GPT-3 called, “Meet the Bot”. This project is set-up like a treasure-hunt, where learners explore the different files to find what they need to get their AI programme working.

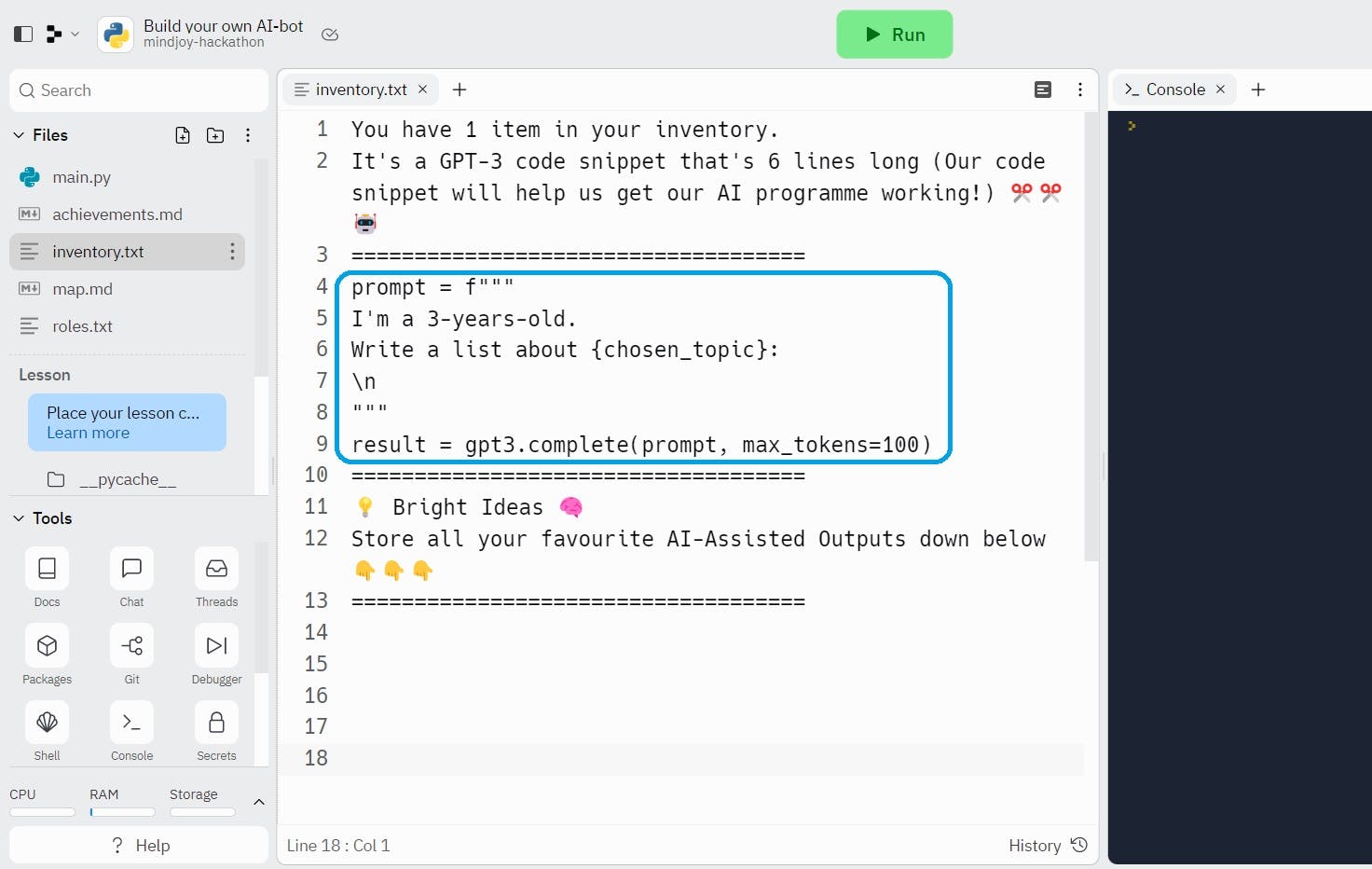

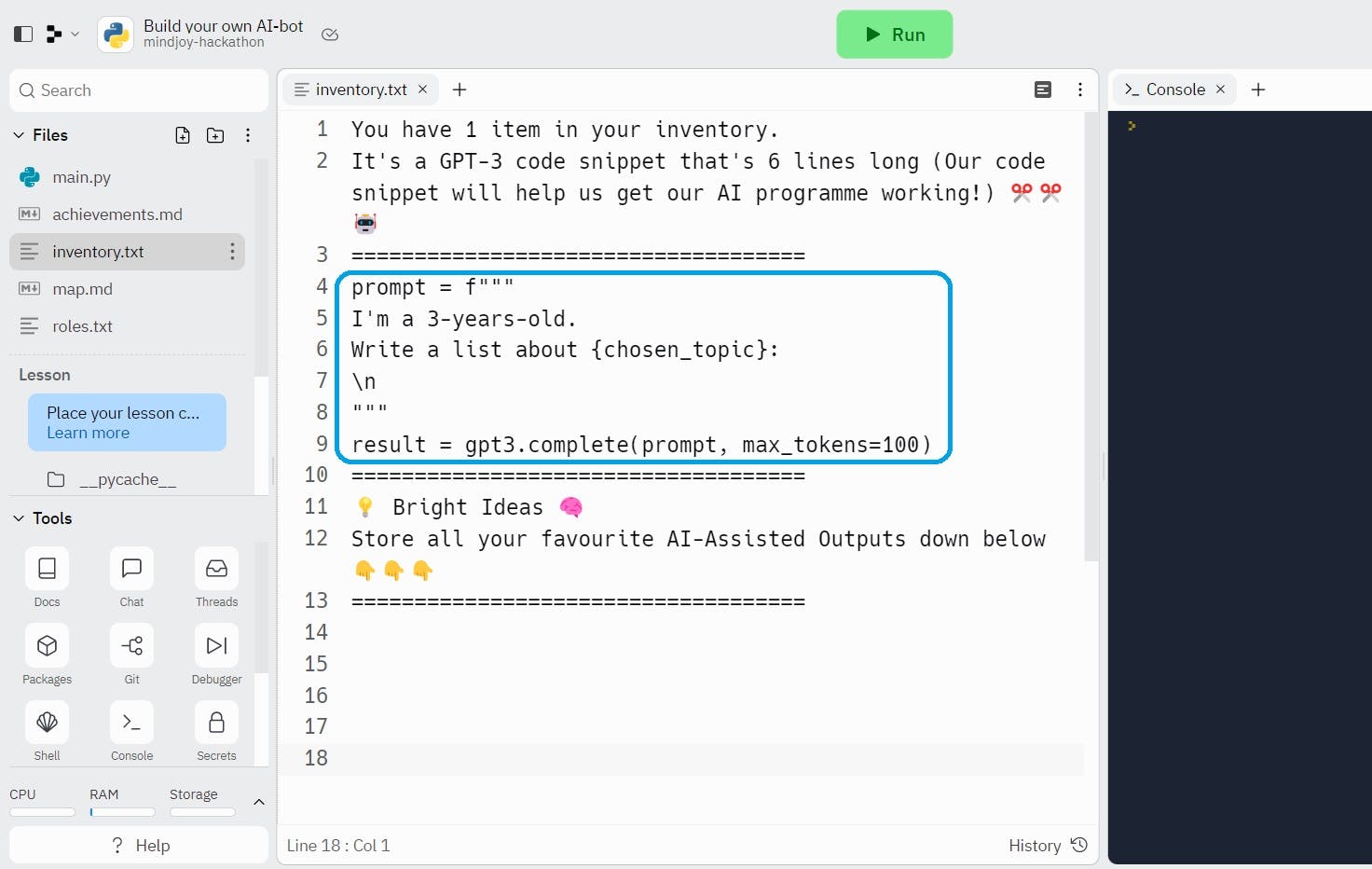

We named the files in the project similarly to games like Minecraft. There’s the “map” file which explains how the learners can navigate through the project, as well as the different functions of the various elements of the platform. There’s the “inventory” file where the learners find their coding prompt to get the AI working, as well as where they can store their AI outputs and keep track of their progress. There is also an “achievements” file, where learners can find their missions and mark them off as they complete them.

When the learners start their project, their AI is not yet functional. They have to find a piece of code (the prompt) and insert it into their main coding file in order to get their programme working.

Once they have input the code snippet into the main file, they click the “run” button and see their AI become active in the console. The facilitators then walk the learners through the step-by-step method to interact with the AI and get the learners to write simple lists with it.
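
The flow described above (a prompt in the main file, then a conversation in the console) could be sketched roughly as follows. This is not the project's actual code: `PROMPT`, `ask_ai` and `run_console` are hypothetical names, and `ask_ai` is a stand-in that fakes a reply instead of calling GPT-3.

```python
# Hypothetical prompt text; in the project, learners find theirs in the "inventory" file.
PROMPT = "You are a friendly bot. Write a list about: "

def ask_ai(full_prompt):
    """Placeholder for the real model call (assumption: not the project's API)."""
    topic = full_prompt[len(PROMPT):]
    return f"1. Something about {topic}\n2. Something else about {topic}"

def run_console(read_line=input, write=print):
    """Keep asking for a topic until the learner types 'quit'."""
    while True:
        topic = read_line("What should the list be about? ").strip()
        if topic.lower() == "quit":
            break
        write(ask_ai(PROMPT + topic))

# Scripted demo so the sketch runs without a keyboard.
# Call run_console() with no arguments to type in the console instead.
scripted = iter(["animals", "quit"])
run_console(read_line=lambda _prompt: next(scripted))
```

Without the `PROMPT` line the bot has nothing to send to the model, which mirrors the "not yet functional" starting state the learners have to fix.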

We set the project up in this way to create familiarity for the learners (as most young students have played games) and to encourage their curiosity. What we have noticed is when young learners are playing games they don’t need as much instruction, as they will naturally explore, test and try things. We want to encourage this approach and way of thinking in the Hackathons.

The goal of this project

Navigating through the files

The code snippet

Writing lists using AI

What we noticed

Interestingly there were a few common trends we discovered from the very beginning.

In project two...

The second project is called “Practising Prompts”. In this project the students learn how to engineer prompts.

A prompt is a natural language processing (NLP) concept that involves writing descriptions that act as inputs for AI generators. In other words, the prompt gives the AI information to help it yield desirable results.

The first few exercises ask the students to do simple homework tasks like getting the AI to write facts about global warming and then asking it to write an essay about climate change. To do this effectively, they have to identify the ways in which the prompt is impacting the AI’s output. The first task, for example, is to change the age of the AI (we set the default age as 3 years old). They begin to notice how increasing the age can make the outputs more complex and interesting.

We also set the default output as a list - the learners have to identify where in their code the AI has been prompted to write lists and change it, in order to get essays.

As they progress in this project, the questions invite more and more creativity - prompting them to write stories, poems and even movie scripts. They’re also instructed to give the AI more personal characteristics (things like emotion, a vocation, opinions, likes and dislikes, etc) and test how the AI’s outputs change as a result.
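
One way to picture the parts of a prompt that learners adjust (age, output type and personal characteristics) is as a small template. The sketch below is an assumption about how such a prompt could be assembled, not the wording used in the project; `build_prompt` and the example values are hypothetical.

```python
def build_prompt(name, age, output_format, traits=()):
    """Assemble a plain-text prompt from the settings a learner can change."""
    lines = [f"You are {name}. You are {age} years old."]
    if traits:
        lines.append("Your personality: " + ", ".join(traits) + ".")
    lines.append(f"Answer every request by writing {output_format}.")
    return "\n".join(lines)

# The defaults described above: a 3-year-old AI that writes lists.
default_prompt = build_prompt("Bot", 3, "a list")

# A customised version: older, writes essays, and has some character.
custom_prompt = build_prompt("Zara", 16, "an essay",
                             traits=["sarcastic", "loves football"])

print(default_prompt)
print()
print(custom_prompt)
```

Changing a single argument and re-running is a quick way to see which part of the prompt is responsible for which change in the output.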

This project is also where we prompt the learners to play and experiment with some of the Python commands, like changing the colour of the text, using variables to change the AI’s name and using the print command to change the introductory statements in the console.
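
As a rough illustration of those three commands, the sketch below uses standard ANSI escape codes to colour console text. We are assuming a small, fixed colour palette here; the project's own colour helper and variable names may differ.

```python
# ANSI escape codes: most terminals switch text colour when these are printed.
COLOURS = {"green": "\033[32m", "yellow": "\033[33m", "blue": "\033[34m"}
RESET = "\033[0m"  # switches back to the default colour

ai_name = "Zara"       # a variable holding the bot's name (hypothetical)
text_colour = "green"  # change this to any key in COLOURS

def coloured(text, colour):
    """Wrap text in the escape codes for the chosen colour."""
    return COLOURS[colour] + text + RESET

# The print command controls the introductory statement shown in the console.
print(coloured(f"Hi, I'm {ai_name}! What homework shall we do today?", text_colour))
```

Asking for a colour that is not in `COLOURS` raises a `KeyError`, which is one plausible way learners run into "missing" colours.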

The goal of this project

What we noticed

Outputs according to the alterations to the code snippet

In project three...

The third project is called “Push the limits with GPT-3.” This project prompts the learners to think more deeply about how GPT-3 is giving them outputs. It asks the learners whether they think the outputs are always factual, whether they think the AI is biased, and to test whether the outputs are unique or plagiarised.

Here they are encouraged to hypothesize and test their theories through trial and error.
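
For the "unique or plagiarised" test, learners used online plagiarism checkers. The basic idea of comparing overlapping word sequences can be sketched in a few lines. This is a simplified illustration of text overlap, not how any particular checker works, and the sample sentences are made up.

```python
def word_ngrams(text, n=3):
    """Return the set of n-word sequences in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def overlap_share(candidate, source, n=3):
    """Fraction of the candidate's n-grams that also appear in the source."""
    cand = word_ngrams(candidate, n)
    if not cand:
        return 0.0
    return len(cand & word_ngrams(source, n)) / len(cand)

# Made-up sentences (assumptions, purely for illustration).
source = "the quick brown fox jumps over the lazy dog"
copied = "the quick brown fox jumps over the lazy dog"
original = "a sleepy dog ignores the fox entirely"

print(overlap_share(copied, source))    # 1.0: every three-word run is shared
print(overlap_share(original, source))  # 0.0: no three-word run is shared
```

A low overlap score only shows that the wording is new, not that the ideas are, which is the kind of distinction the learners go on to debate.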

The goal of this project

What we noticed

This project opens up a lot of interesting questions from the students about ethics and what it means for the future.

A personalised prompt

A collection of the AI’s outputs when prompted for jokes and bias, stored in the learners’ inventory file.

School Song remix

While facilitating hackathons in KwaZulu-Natal, our facilitators came up with the idea to get the learners to prompt the AI for new variations of their school songs. Below are some of the actual outputs which they obtained.

Durban Girls’ College

Kearsney College

Waterfall College

Different points of view in one output: Learner asked the AI to write about Math from a teacher’s point of view (green) and a learner’s point of view (white)

HOW EDUCATORS RESPONDED

As mentioned earlier, the educators’ responses often told us a lot about the culture of curiosity at a school. Schools with a stronger culture of curiosity often had teachers walking around the room and asking learners about their projects, while other teachers seemed more interested in outsourcing the experience and leaving it to the facilitators.

Of the 50 schools we went to, only 6 educators joined in and started their own AI project, and used GPT-3 to set exam papers or comprehension tests (with questions and answers).

The majority of feedback we received from educators was surprise at how we engaged learners through our coaching approach - even those learners they perceived to be generally less focused or to be non-coders. We received very little feedback or questions from educators about the technology itself and its potential or use-case in the classroom.

Many educators were surprised that we managed to get learners coding using Python (a majority of the schools primarily use block-based coding) and were excited by the potential of this newer language.

Their general feedback to the learners, when asked whether the AI could do their homework or not, was that they knew the students well enough to be able to identify whether it was their work or not (and that the students wouldn’t be able to “get away” with using it to do their homework).

STUDENT COMPREHENSION

At the end of every session, facilitators would wrap up the Hackathon with reflection questions. Here are some of the questions we asked and how students responded:

The resounding answer was always yes. Some students specified that it depends on how well you learn to prompt it.

3. What do you think are the cons of this technology?

It was incredible to witness the difference between the knowledge and engagement of the students at the start and at the end of each Hackathon. At the start of each session, the students thought the AI was simply pulling information from Google. By the end of the session, after discussions about bias and plagiarism, there were many interesting questions and discussions about what creativity is and whether an AI can indeed be creative. Many students agreed that the AI was being creative, but this raised questions for them around originality.

Many students asked whether they would get in trouble for using this AI to actually do their homework (especially since they discovered it does not plagiarise), and were shocked when they learned that there is still a lack of legislation and monitoring around the use of AI. This opened many debates about the ethics of using it.

A large majority of the feedback from learners expressed a positive sentiment regarding the possibilities and potential of AI - this was in contrast to the adults who experienced this project, who often expressed concern about how this would change certain industries or how they will need to upskill. For adults, the fear surrounding this AI is around becoming irrelevant. For most young learners, the fear is around cyber-security - more specifically, after realising how smart and efficient the AI is, they express concern that it can be used for more insidious purposes (when asked what these insidious purposes might be, most learners spoke about use of private data).

Overall, the learners’ sentiments around AI were largely positive. It was fascinating to witness their creativity and the excitement that came from their sense of autonomy (when asked what their favourite part of the experience was, about 45% of learners said they enjoyed “being creative without limitations”).
