Data Engineer Cert. - DataCamp

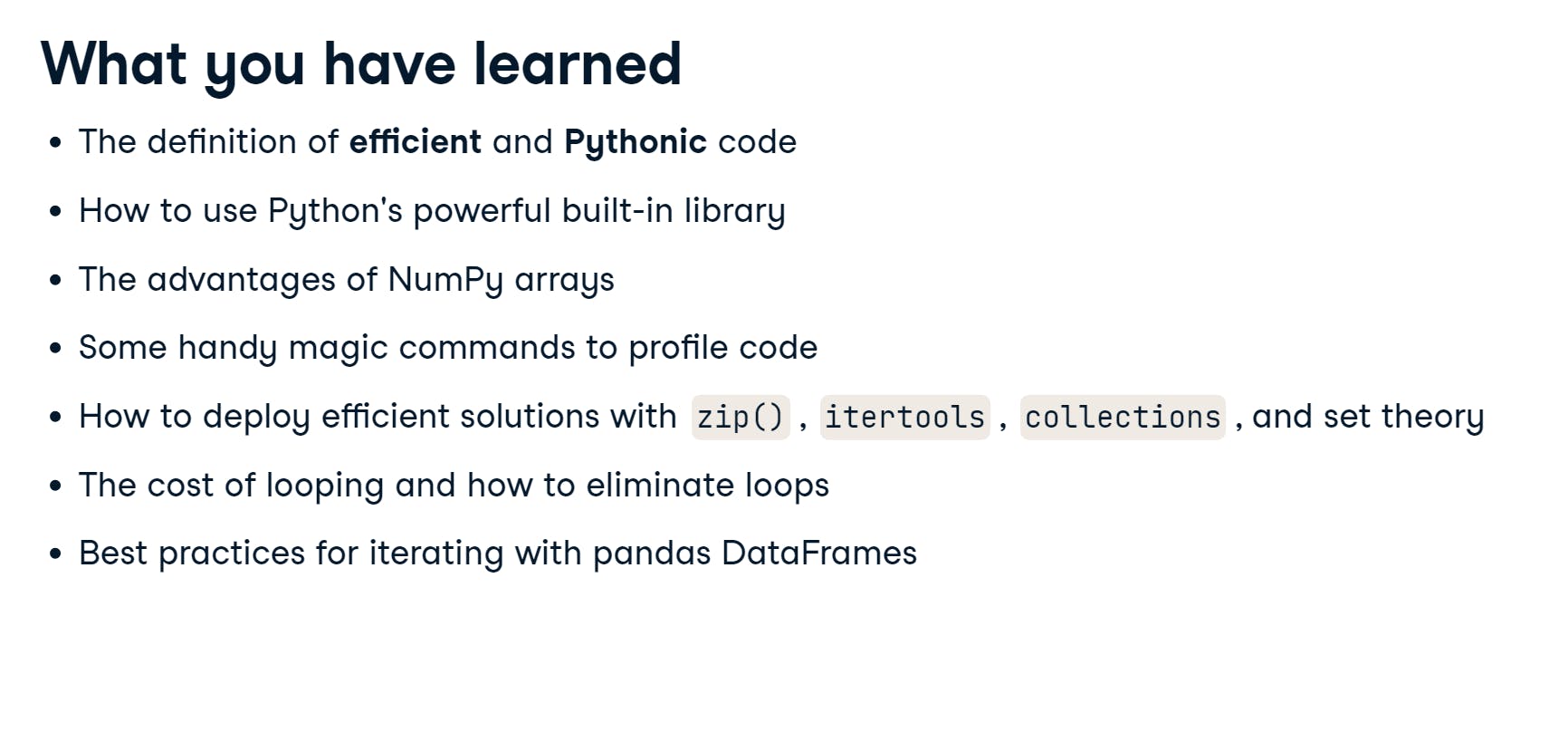

!Writing Efficient Code in Python

Built-in functions

Timing and profiling

Gaining Efficiencies

Pandas optimizations

!Writing Functions in Python

Intro to Shell

file listing

print working directory

change directory

move up one level

change to home directory

copy file

move file (can also use for renaming file)

remove file

remove directory

view file content

see first 10 lines of file

see first 3 lines of file

list everything below current directory

select columns 2 to 5 and column 8, using , as the separator

-f : field

-d : delimiter
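To make the field selection above concrete, here is a plain-Python sketch of the same idea. It is an analogue for illustration, not how the shell command is implemented; the function name `select_fields` and the sample row are hypothetical.

```python
def select_fields(line, fields, delimiter=","):
    """Keep the 1-based fields listed in `fields`, in their original order."""
    parts = line.split(delimiter)
    wanted = sorted(set(fields))
    # Field numbers beyond the end of the line are silently skipped.
    return delimiter.join(parts[i - 1] for i in wanted if i <= len(parts))

# Fields 2 to 5 plus field 8, with "," as the delimiter.
row = "a,b,c,d,e,f,g,h,i"
picked = select_fields(row, list(range(2, 6)) + [8])
print(picked)  # b,c,d,e,h
```

Fields come out in file order regardless of the order in which they were requested, which is why the sketch sorts the field numbers first.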

search for pattern in file

-c : print a count of matching lines instead of the lines

-h : do not print the filename when searching multiple files

-i : ignore case

-l : print the names of files that contain the matches

-n : print line number of matching lines

-v : invert match
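The flags above can be pictured with a small Python analogue built on the `re` module. This is a sketch under the assumption that lines are already loaded into a list; the helper name `grep` and the sample lines are hypothetical.

```python
import re

def grep(pattern, lines, ignore_case=False, invert=False):
    """Return (line_number, line) pairs, numbered from 1 like the -n flag."""
    flags = re.IGNORECASE if ignore_case else 0
    regex = re.compile(pattern, flags)
    return [
        (number, line)
        for number, line in enumerate(lines, start=1)
        # With invert=True, keep the lines that do NOT match (like -v).
        if bool(regex.search(line)) != invert
    ]

lines = ["Error: disk full", "ok", "error: retry", "done"]

matches = grep("error", lines, ignore_case=True)               # like -i -n
count = len(matches)                                           # like -c
others = grep("error", lines, ignore_case=True, invert=True)   # like -v
```

Without `ignore_case`, only the third line matches, because the first one starts with a capital letter.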

store the first 10 lines of filename.csv in store.csv

pipe the first 5 lines of filename.csv into the next command as its input

print the number of bytes -c (use -m for characters), words -w , or lines -l in a file

* matches any characters at any length

? matches a single character

[...] matches any one of the characters inside

{...} matches any of the comma-separated patterns inside
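The first three wildcards can be tried out in Python with the standard `fnmatch` module, which follows the same shell-style rules. This is a sketch with hypothetical file names. Note that `{...}` is expanded by the shell itself before any matching happens, and `fnmatch` has no equivalent, so the sketch tries each alternative in turn.

```python
from fnmatch import fnmatchcase

names = ["data.csv", "data1.csv", "data2.csv", "notes.txt"]

def matching(pattern):
    """Return the names that match a shell-style wildcard pattern."""
    return [name for name in names if fnmatchcase(name, pattern)]

star = matching("*.csv")             # * matches any run of characters
single = matching("data?.csv")       # ? matches exactly one character
bracket = matching("data[12].csv")   # [...] matches one of the listed characters

# Stand-in for *.{csv,txt}: try each alternative pattern separately.
brace = [name for pattern in ("*.csv", "*.txt") for name in matching(pattern)]
```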

sort output

-n numerical order

-r reversed order

-f fold case (case-insensitive)

-b ignore leading blank

remove adjacent duplicate lines
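A short Python analogue shows why the sort options matter and why duplicates are only removed when they are adjacent. The sample values and the helper name `uniq` are hypothetical; this is a sketch, not a reimplementation of the shell tools.

```python
from itertools import groupby

values = ["10", "9", "100", "9"]

text_order = sorted(values)                            # default: compared as text
numeric = sorted(values, key=int)                      # like -n
numeric_desc = sorted(values, key=int, reverse=True)   # like -n -r
folded = sorted(["b", "A", "c"], key=str.lower)        # like -f

def uniq(lines):
    """Drop repeated lines only when they sit next to each other."""
    return [key for key, _ in groupby(lines)]

unsorted_result = uniq(["a", "b", "a", "a"])           # the first "a" survives separately
sorted_result = uniq(sorted(["a", "b", "a", "a"]))     # sorting first removes all repeats
```

This is why duplicate removal is normally run on sorted output.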

assign text.txt to a variable called filename

print value contained in the variable filename

print name of every file in the folder directory

open filename in editor

ctrl + k cut a line

ctrl + u paste a line from clipboard

ctrl + o save the file

ctrl + x exit the editor

run commands in script.sh

Data Processing in Shell

data download

curl (Client URL): download data from HTTP or FTP servers

-O download and keep the file's existing (remote) filename

-o newname download and rename to newname

-L follow the redirect when the server responds with a 3xx status code

-C resume previous file transfer if it times out before completion

wget (World Wide Web get): native command-line tool for downloading files

better than curl for multiple file downloading

-b background download

-q turn off wget output

-c resume broken download

-i download from list given in a file

--wait=1 wait 1 second between downloads

csvkit

convert the first sheet in filename.xlsx to filename.csv

list all sheet names

convert sheet sheet1 to filename.csv

preview filename.csv to console

print summary statistics for each column to the console (similar to pandas df.describe())

list all column names in filename.csv

return the column at index 1 (as numbered in the output of csvcut -n ) from filename.csv

the column can also be given by name, as -c "column name"

filter by row using exact match or regex

must use one of the following options

-m exact row value

-r regex pattern

-f path to a file

filter filename.csv where column name == value

stack file1.csv and file2.csv together and save to allfile.csv

create a special column named source (instead of the default group) to identify which row comes from which file
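The stacking behaviour can be sketched in plain Python with the standard `csv` module. This is an analogue for illustration, not csvkit's implementation; the function name `stack_csv` and the in-memory sample data are hypothetical, and the sketch assumes both inputs share the same header.

```python
import csv
import io

def stack_csv(sources, group_column="group"):
    """Concatenate CSV texts that share a header, tagging each row with its source."""
    rows = []
    for name, text in sources.items():
        for row in csv.DictReader(io.StringIO(text)):
            # The extra column records which input the row came from.
            rows.append({group_column: name, **row})
    return rows

# Hypothetical in-memory stand-ins for file1.csv and file2.csv.
sources = {
    "file1": "id,value\n1,a\n2,b\n",
    "file2": "id,value\n3,c\n",
}
stacked = stack_csv(sources, group_column="source")
```

Every value is read as a string here, since the `csv` module does no type inference.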

SQL

connect to the database sqlite:///database.db , run the query SELECT * FROM base , and save the result to filename.csv

run the same query against the local file filename.csv

this also works with multiple CSV files, but the files must be listed in the same order as the tables appear in the SQL query

insert filename.csv into a database

--no-inference disable type parsing (consider everything as string)

--no-constraints generate schema without length limit or null check

cron job

Add a job that runs every minute, on the minute, to the crontab

a cron schedule has 5 time fields (minute, hour, day of month, month, day of week); a * in a field means every value

list all cron jobs
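The layout of a crontab line can be made explicit with a small Python sketch that splits an entry into its five time fields and the command. The function name `parse_cron` and the sample entries are hypothetical; this only labels the fields and does not validate their values.

```python
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")

def parse_cron(line):
    """Split a crontab line into its five time fields and the command."""
    # Split on whitespace at most 5 times so the command keeps its own spaces.
    parts = line.split(None, 5)
    if len(parts) < 6:
        raise ValueError("expected five time fields followed by a command")
    schedule = dict(zip(CRON_FIELDS, parts[:5]))
    return schedule, parts[5]

# Hypothetical entry: five asterisks means "every minute".
schedule, command = parse_cron("* * * * * python script.py")

# Hypothetical entry: 02:15 every Sunday (day_of_week 0).
weekly, weekly_command = parse_cron("15 2 * * 0 backup.sh")
```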

Intro to Bash

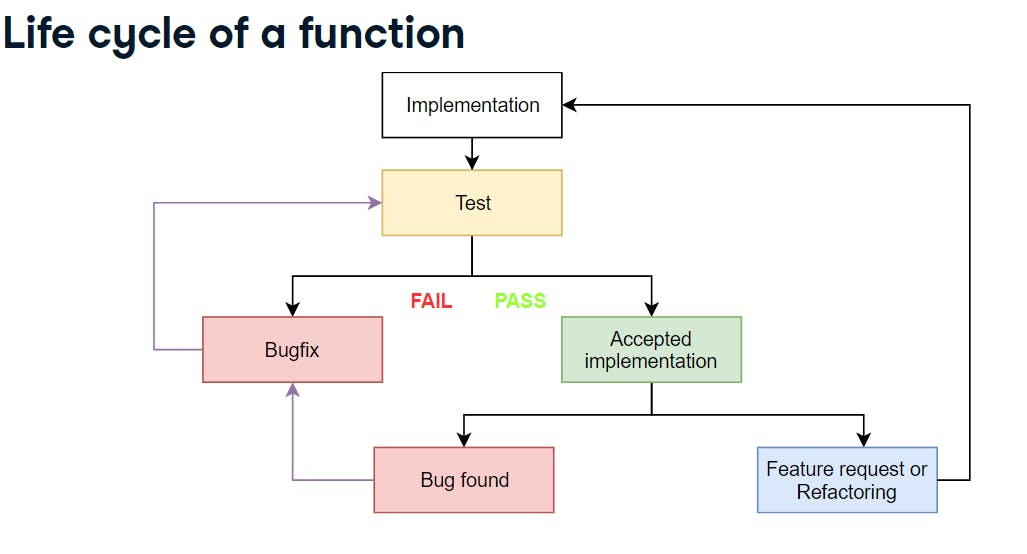

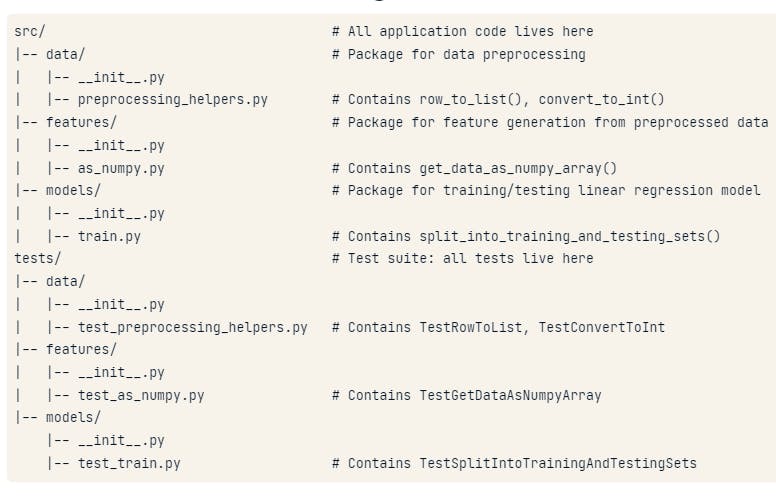

!Unit Testing

!Object Oriented

Intro to Airflow

Airflow Operator

Bash Operator

Python Operator

Sensor

File sensor

Other sensors

Template

Branching

Running DAGs and Tasks

Intro to Spark

ML Pipeline in Spark

!AWS Boto

S3

Permission

SNS

Intro to Relational Database

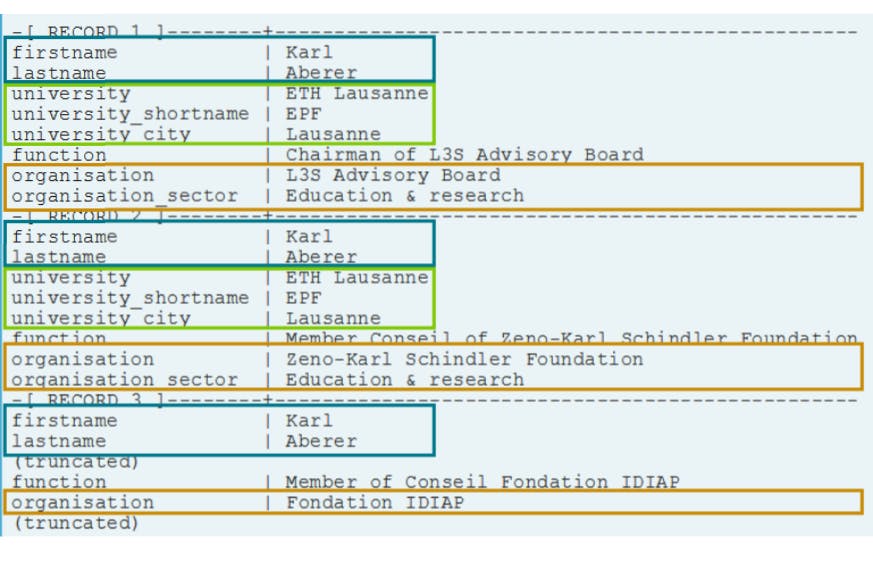

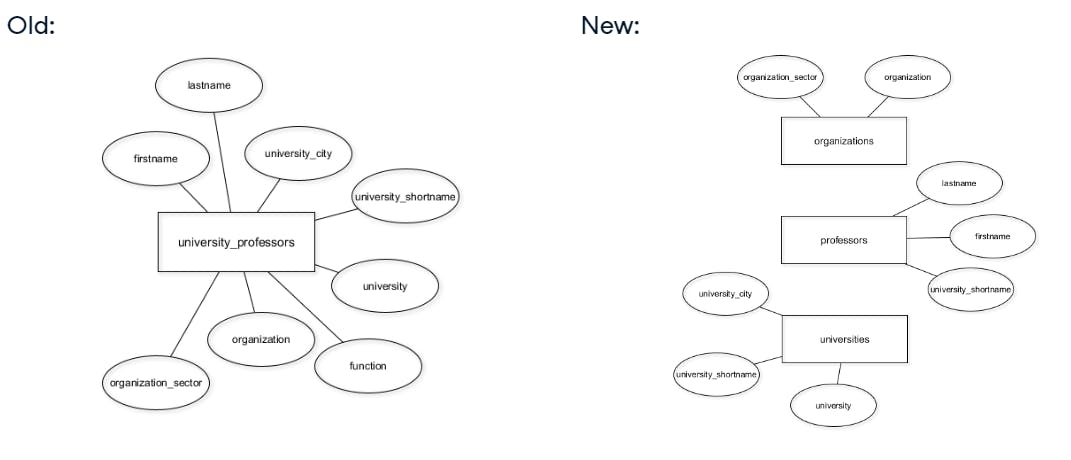

Relational Database

Example

Migrate the university_professors table to new schema

Data constraints

Attribute constraints

Key constraints

Referential integrity constraints

Database Design

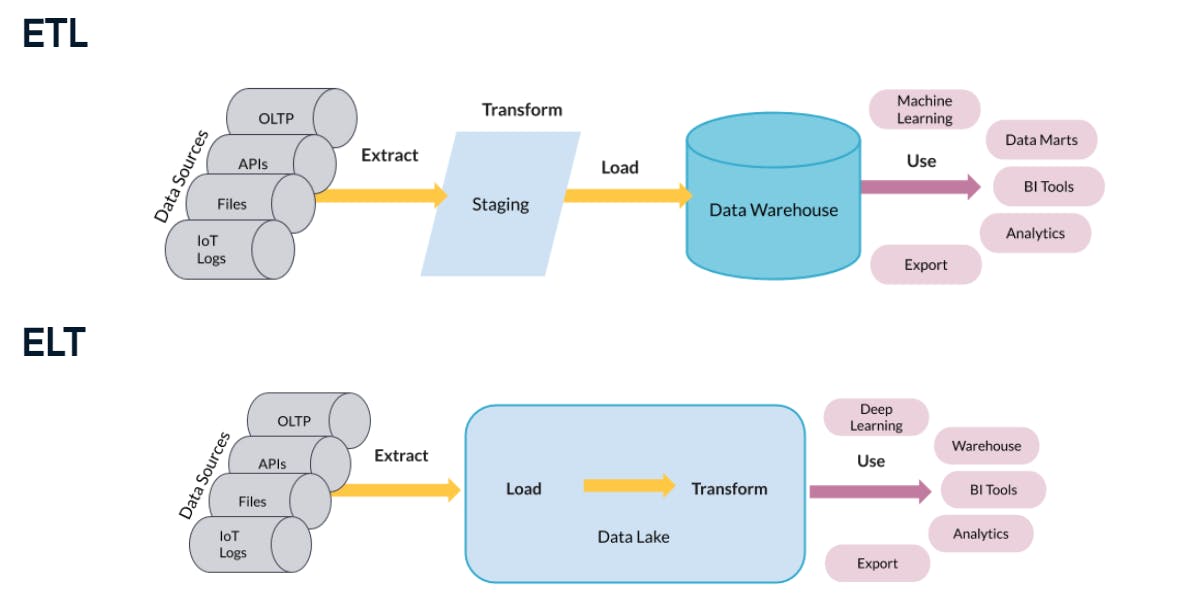

Structuring data

Data modeling

Dimensional modeling

Elements of dimensional modeling

Normal forms

1NF

2NF

3NF

Database views

Table partitioning

Intro to Scala

Scala as a compiler

Function

Array

List

if

while

Big Data with PySpark

PySpark

Inspect the Spark version currently in use

Inspect the Python version currently in use

Inspect the URL of the cluster, or "local" when running in local mode

Load list values into SparkContext

Load string Hello to SparkContext

Load local file text.txt to SparkContext

Resilient Distributed Datasets (RDD)

Load list to sparkcontext with minimum 4 partitions to store the data

RDD Operations

Transformation

return [1,4,9,16]

return [3,4]

return ["hello", "world", "how", "are", "you"]

return [1, 2, 3, 4, 5, 6, 7]

Actions

rdd_map.collect() returns [1,4,9,16]

rdd_map.take(2) returns [1,4]

rdd_map.first() returns 1 (a single element, not a list)

rdd_map.count() returns 4
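The results listed above can be reproduced with plain Python lists, which may help when checking what each operation is expected to return. This is a local analogue, not Spark code: the RDD method each line mirrors is named in a comment, and the input values are assumptions chosen to match the results in these notes. One difference to keep in mind is that Spark transformations are lazy and only run when an action is called, while the list operations below run immediately.

```python
from itertools import chain, islice

numbers = [1, 2, 3, 4]

# Transformation analogues
squared = [x * x for x in numbers]        # like rdd.map(lambda x: x * x)
large = [x for x in numbers if x > 2]     # like rdd.filter(lambda x: x > 2)
sentences = ["hello world", "how are you"]
words = list(chain.from_iterable(s.split(" ") for s in sentences))  # like rdd.flatMap(...)
combined = [1, 2, 3, 4] + [5, 6, 7]       # like rdd.union(other_rdd)

# Action analogues on the squared values
collected = list(squared)                 # like collect(): every element
first_two = list(islice(squared, 2))      # like take(2): the first two elements
first = squared[0]                        # like first(): one element, not a list
size = len(squared)                       # like count()
```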

Pair RDDs

create pair RDD from a list of key-value tuple

Transformations

return [("name1", 23), ("name2", 66), ("name3", 12)]

return [(66, "name2"), (23, "name1"), (12, "name3")]

return [ ("DE", ("Munich", "Berlin")), ("UK", ("London")), ("NL", ("Amsterdam")) ]

return [ ("name1", (20, 21)), ("name2", (23, 17)), ("name3", (12, 4)) ]

Actions

return 14

return ("k1", 2), ("k2", 1)

return {"k1": 3, "k2": 6}
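The key-based operations above can likewise be sketched with plain Python collections. Again this is a local analogue rather than Spark code: the pair RDD method each step mirrors is named in a comment, and the sample pairs are hypothetical. The join sketch assumes each key appears once per side.

```python
from collections import Counter, defaultdict
from functools import reduce

pairs = [("k1", 1), ("k2", 4), ("k1", 2), ("k2", 2)]

# like reduceByKey(lambda a, b: a + b): combine the values that share a key
totals = defaultdict(int)
for key, value in pairs:
    totals[key] += value
reduced = sorted(totals.items())

# like sortByKey(ascending=False): order the pairs by key, descending
by_key_desc = sorted(pairs, key=lambda kv: kv[0], reverse=True)

# like groupByKey(): collect every value under its key
groups = defaultdict(list)
for key, value in pairs:
    groups[key].append(value)

# like join(): pair up the values from two datasets that share a key
left = {"name1": 20, "name2": 23}
right = {"name1": 21, "name2": 17}
joined = [(k, (left[k], right[k])) for k in left if k in right]

# Action analogues
total = reduce(lambda a, b: a + b, [v for _, v in pairs])  # like reduce()
per_key = Counter(k for k, _ in pairs)                      # like countByKey()
as_map = dict(reduced)                                      # like collectAsMap()
```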

PySparkSQL

DataFrame Operations

Transformations

Actions

SQL Queries

Visualization

MLlib

Collaborative Filtering

Classification

Cleaning Data with PySpark

Parquet file

Working with Parquet

DataFrame

Caching

Performance

Shuffling

Pipeline

!Intro to MongoDB
